Today, we're introducing AI SAST that detects complex business logic and architecture flaws while reducing false positives by up to 95%. It works by orchestrating multiple AI agents to reason about code the way a security engineer does.

It is built with the same philosophy that powers Endor Labs’ software composition analysis (SCA), which achieved an average 92% reduction in false positives for teams at Atlassian, Cursor, Dropbox, OpenAI, Peloton, Robinhood, Snowflake, and more. Now that same approach applies to the code you write.

Industry benchmarks show legacy static application security testing (SAST) tools experience 68-78% false positive rates on average for common languages, while users report false positives reaching as high as 95% on production code. That means roughly 3 out of 4 alerts are wrong. Security teams spend 15-30 minutes triaging each false positive on average, while real vulnerabilities take 4 hours or more to fix. Today, enterprises face over one million alerts a year. At that scale, the math doesn't work.
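To make that math concrete, here is a back-of-the-envelope sketch in Python that uses only the figures quoted above. The function name is ours, and the assumption that every false positive is triaged by hand is a simplification for illustration.

```python
def triage_hours(alerts_per_year: int, fp_rate: float, minutes_per_fp: float) -> float:
    """Hours per year spent on false positives, assuming each one is triaged by hand."""
    return alerts_per_year * fp_rate * minutes_per_fp / 60


# Best case: 68% false positives at 15 minutes each.
low = triage_hours(1_000_000, 0.68, 15)
# Worst case: 78% false positives at 30 minutes each.
high = triage_hours(1_000_000, 0.78, 30)

print(f"{low:,.0f} to {high:,.0f} hours per year")
```

Under those assumptions, the range works out to 170,000 to 390,000 hours a year spent on alerts that turn out to be wrong.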

Worse, the noise masks a critical gap: legacy rule-based SAST tools miss complex vulnerabilities and have high rates of false negatives. Business logic flaws, broken access control, and insecure design practices slip into production and can cause the most damage.

To solve these challenges, Endor Labs orchestrates multiple specialized agents, each with access to different analytical tools, working together to understand code from every angle: syntax parsing to detect insecure patterns, dataflow to trace information movement, and multi-pass reasoning to understand intent and context. This multi-modal approach enables Endor Labs to reason about code the way your security team does, surfacing sophisticated vulnerabilities that evade conventional tools while distinguishing between true and false positives.

AI SAST is currently available in private preview to customers in our design partner program.

How it works

Understanding code requires multiple analytical lenses working together. Rules parse syntax to detect known patterns. Dataflow analysis reveals how information moves through the application. AI reasoning understands intent and context. No single approach sees the whole picture, but together they build context about how an application works.

This multi-modal approach replicates the same process security teams use manually: read the code, trace the dataflow, reason about business logic. But at enterprise scale—reviewing 1,000 pull requests across 5,000 repositories daily—manual analysis becomes impossible. Every percentage point of false positives creates hours of wasted work.

Building the foundation with tools

To enable this analysis, we've built specialized tools that help our agents rapidly assemble code context. This includes the Endor Labs Code API, a proprietary tool that builds a semantic model of the code, on top of which callgraphs, dataflow, and code search operations can be performed quickly. This helps agents understand multi-file and multi-function interactions. Other tools include access to Opengrep, Endor Labs’ policy engine, and more.

Unlike approaches that dump entire codebases into an LLM, which burns tokens and quickly becomes prohibitively costly at enterprise scale, this approach strategically targets LLMs where semantic understanding is critical. In early testing with five enterprise customers, this allowed us to automatically triage every finding, rather than requiring users to manually trigger analysis.

Orchestrating code analysis

Multi-modal analysis orchestrates multiple agents, each scoped for a specific security task. We use multi-pass analysis with different agents to drive extremely high noise reduction while keeping costs contained. Our SAST workflow consists of different stages with each stage operationalizing one or more agents:

- Detection: Uses the Code API to identify how untrusted data flows through an application and detect vulnerabilities, including architecture and business logic flaws like IDORs.

- Triage: Applies multi-modal analysis to remove false positives, comparing dataflow findings with insecure patterns detected by the Opengrep tool.

- Remediation: Leverages the Code API to generate context-aware fixes that resolve vulnerabilities without introducing regressions.

These agents work alongside our AI Security Code Review agents that assess pull requests for architecture design and business logic flaws that impact your security posture.
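As a rough illustration of the triage idea, the sketch below cross-checks a pattern match against dataflow evidence that reaches the same location. It is not the Endor Labs implementation: the `PatternHit` and `DataflowPath` shapes, the `triage` function, and the decision rule are all hypothetical, and the real system layers AI reasoning on top of this kind of evidence.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Verdict(Enum):
    TRUE_POSITIVE = "true_positive"
    FALSE_POSITIVE = "false_positive"
    UNKNOWN = "unknown"


@dataclass(frozen=True)
class PatternHit:
    """A rule match, such as a pattern scanner might report (hypothetical shape)."""
    rule_id: str
    file: str
    line: int


@dataclass(frozen=True)
class DataflowPath:
    """A traced path from a data source to a sink (hypothetical shape)."""
    source_untrusted: bool
    sanitized: bool
    sink_file: str
    sink_line: int


def triage(hit: PatternHit, paths: List[DataflowPath]) -> Verdict:
    """Cross-check a pattern hit against dataflow paths ending at the same sink."""
    reaching = [p for p in paths if (p.sink_file, p.sink_line) == (hit.file, hit.line)]
    if not reaching:
        return Verdict.UNKNOWN  # no dataflow evidence either way
    if any(p.source_untrusted and not p.sanitized for p in reaching):
        return Verdict.TRUE_POSITIVE  # untrusted data reaches the sink unsanitized
    return Verdict.FALSE_POSITIVE  # every path is trusted or sanitized


hit = PatternHit("sql-injection", "orders.py", 42)
tainted = DataflowPath(source_untrusted=True, sanitized=False,
                       sink_file="orders.py", sink_line=42)
constant = DataflowPath(source_untrusted=False, sanitized=False,
                        sink_file="orders.py", sink_line=42)

print(triage(hit, [tainted]).value)
print(triage(hit, [constant]).value)
print(triage(hit, []).value)
```

The same pattern hit gets three different verdicts depending on what the dataflow shows, which is the point of combining the two signals rather than trusting either alone.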

For every finding, the system:

- Classifies it as true positive, false positive, or unknown: This works across the full OWASP 2025 Top 10, including categories like broken access control and insecure design that have historically been difficult for SAST tools to detect accurately

- Provides analysis explaining the classification decision: Understanding the reasoning behind each classification helps your team learn and build trust in the system

- Recommends a fix that resolves the vulnerability: Going beyond detection to actually suggest remediation

This classification becomes another control in your security workflow, just like severity filters, confidence levels, and file-based exceptions you may already use.

The system also adapts to your organization through custom prompts so you can teach it about your unique security requirements, architectural patterns, and risk priorities. Combined with user feedback on classifications, the system continuously adapts, making findings more aligned with how your security team actually thinks about risk.

The result is an application security platform that understands both how your code works and what matters to your organization.

Results

Early testing in private repositories with five enterprise partners across technology, data, and security industries demonstrated the impact and benefits of this approach.

Business Logic Flaw Detection

A classic logic flaw rule-based SAST misses is Insecure Direct Object Reference (IDOR). For example, an API endpoint like /api/documents/{documentId} retrieves a document based solely on the ID in the URL, without verifying whether the authenticated user actually owns or has permission to access that document. If the application only checks that you're logged in but doesn't validate object-level permissions, an attacker can simply increment the document ID or use enumeration to access other users' sensitive files.
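The pattern is easier to see in code. The following is a minimal, framework-free sketch in Python: the in-memory `DOCUMENTS` store, the `Forbidden` exception, and both handler functions are hypothetical stand-ins for a real endpoint, and ownership is used as the simplest possible permission model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Document:
    doc_id: int
    owner_id: int
    body: str


# Hypothetical in-memory store standing in for a database.
DOCUMENTS = {
    1: Document(1, owner_id=100, body="Alice's contract"),
    2: Document(2, owner_id=200, body="Bob's payslip"),
}


class Forbidden(Exception):
    """Raised when the caller may not access the requested object."""


def get_document_vulnerable(user_id: Optional[int], doc_id: int) -> str:
    """Checks authentication only: any logged-in user can read any document."""
    if user_id is None:
        raise Forbidden("login required")
    return DOCUMENTS[doc_id].body


def get_document_fixed(user_id: Optional[int], doc_id: int) -> str:
    """Adds the missing object-level check: the caller must own the document."""
    if user_id is None:
        raise Forbidden("login required")
    doc = DOCUMENTS[doc_id]
    if doc.owner_id != user_id:
        raise Forbidden("not your document")
    return doc.body


# User 100 (Alice) asks for document 2, which belongs to user 200 (Bob).
leaked = get_document_vulnerable(100, 2)
try:
    get_document_fixed(100, 2)
    blocked = False
except Forbidden:
    blocked = True
```

Nothing in the vulnerable handler looks dangerous on its own, which is why a syntax-level rule has nothing to match. The flaw is the absence of a check, and spotting it requires knowing that documents have owners.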

During testing, Endor Labs successfully identified IDOR vulnerabilities that traditional SAST tools had missed entirely, demonstrating how semantic understanding of authorization flows catches what pattern matching cannot. This matters more than ever: according to OWASP as part of their research into the top 10 application risks, 100% of applications tested had some form of broken access control, making it the most critical and pervasive security issue facing modern applications.

Security Reasoning

One example that surfaced during testing was SHA-1, a deprecated cryptographic hash function. Most scanners flag every instance as a vulnerability. Endor Labs' multi-modal analysis understands not just what the code does, but why it does it.

In most cases, it correctly identifies the usage of SHA-1 as insecure. But when it encounters validation code implementing ASN.1 DER encoding per the SHA-1 specification, where SHA-1 is the required digest algorithm, it correctly classifies the finding as a false positive and explains why.

Benchmark Testing

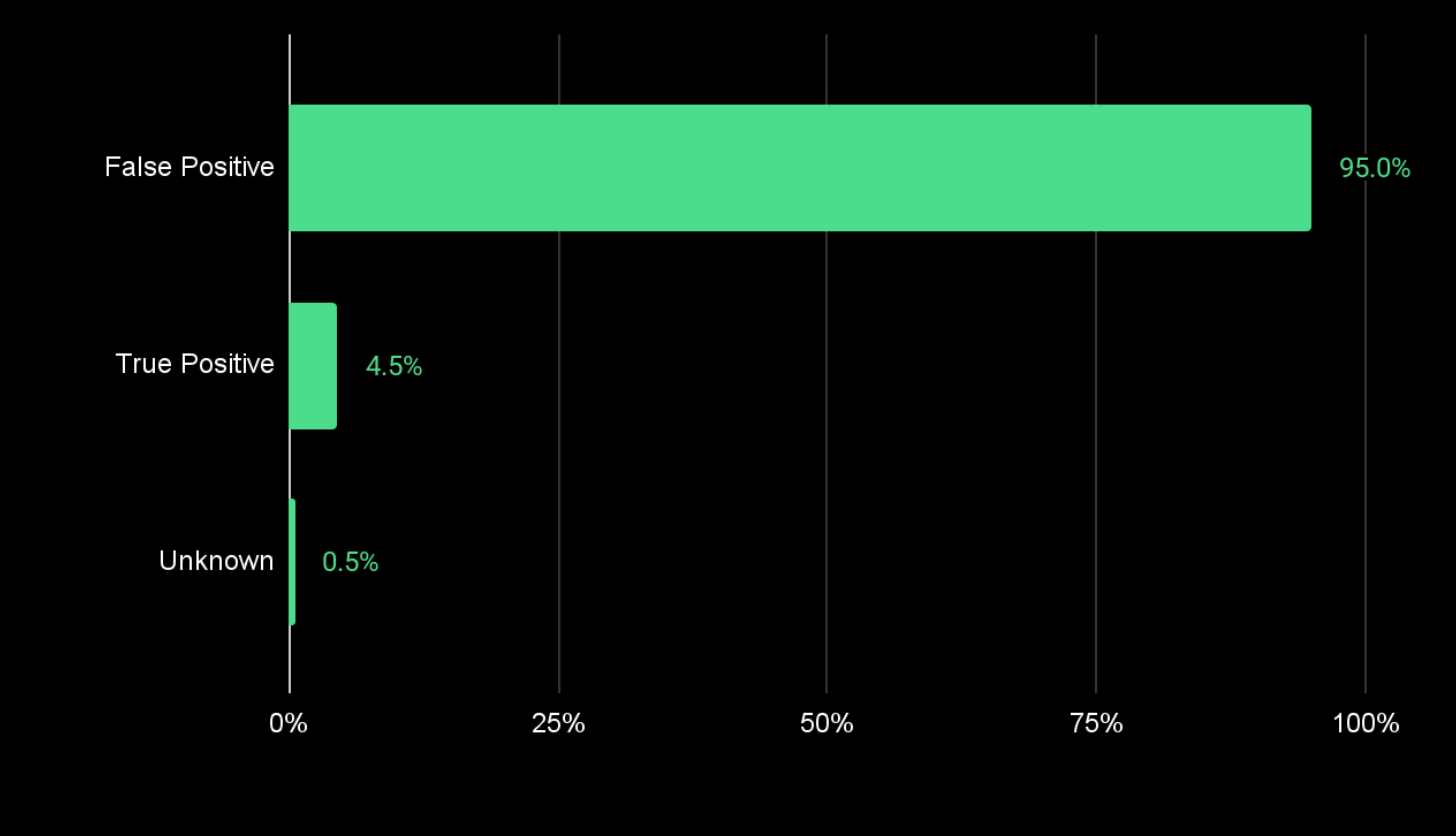

Early benchmark testing in customer environments and against open source SAST alternatives shows that Endor Labs accurately identified:

- 95% of findings as false positives: eliminating noise before it reaches your team

- 4.5% of findings as true positives: the vulnerabilities that actually matter

- 0.5% of findings as unknown: cases where the system needs more context

These results mean your team can focus on the 5% of findings that matter instead of manually triaging every single one to classify it as a true or false positive. These preliminary findings have held up in production customer environments, and we’ll publish a full benchmarking report and guide soon.

Why it matters

Legacy SAST tools promised early detection but consistently failed to deliver. Most flood teams with false positives, miss nuanced logic flaws, and struggle with the complexity of modern codebases that are dependency-rich and increasingly mix human and AI-written code.

Security tooling either enables engineering velocity or becomes a bottleneck that slows the delivery of new features. When you're shipping thousands of changes daily across hundreds of repositories, manually clicking through a feed of security findings isn't just tedious, it's impossible.

Endor Labs solves this by automating the layered reasoning your security team already does. Every finding is automatically triaged and classified before it reaches your team. And it’s fully integrated with our enterprise-ready application security platform featuring:

- Policy-driven automation: AI SAST is fully integrated with Endor Labs' policy engine, powered by Open Policy Agent (OPA). Use built-in policies or write your own to surface only high-confidence findings, automatically suppress false positives, or block risky pull requests.

- API-first integration: The Endor Labs application security platform integrates seamlessly into your existing CI/CD pipelines and security workflows, giving you programmatic access to findings, classifications, and policy decisions.
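To show the kind of decision logic such a policy might encode, here is a small Python sketch. It is an illustration only: OPA policies are written in Rego rather than Python, and the `Finding` fields, severity labels, and action names below are hypothetical rather than taken from the Endor Labs API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Finding:
    """Hypothetical finding record; field names are illustrative only."""
    rule: str
    severity: str        # "low", "medium", "high", or "critical"
    classification: str  # "true_positive", "false_positive", or "unknown"


BLOCKING_SEVERITIES = {"high", "critical"}


def decide(finding: Finding) -> str:
    """Map a classified finding to one of three actions: suppress, surface, or block."""
    if finding.classification == "false_positive":
        return "suppress"
    if finding.classification == "true_positive" and finding.severity in BLOCKING_SEVERITIES:
        return "block"
    return "surface"  # lower-severity true positives and unknowns go to a human


def pr_is_blocked(findings: List[Finding]) -> bool:
    """A pull request is blocked if any of its findings resolves to "block"."""
    return any(decide(f) == "block" for f in findings)


findings = [
    Finding("weak-hash", "high", "false_positive"),
    Finding("idor", "critical", "true_positive"),
    Finding("open-redirect", "medium", "unknown"),
]
actions = [decide(f) for f in findings]
print(actions, pr_is_blocked(findings))
```

Here the classification acts as one more input alongside severity, so a high-severity false positive is suppressed while a confirmed critical finding stops the pull request.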

The business impact is immediate: faster PR reviews, fewer context switches for developers, and measurable reduction in security debt. Security becomes an enabler of velocity rather than a bottleneck.

Availability

AI SAST is available now through our design partner program. We're working with early customers to validate and refine these capabilities in production environments.

Book a demo to request early access and to learn more.
