SAST that thinks like a security engineer

Intelligent static analysis that understands how your code works and what matters to your organization. Know what’s exploitable, what’s not, and how to fix it.

How it works

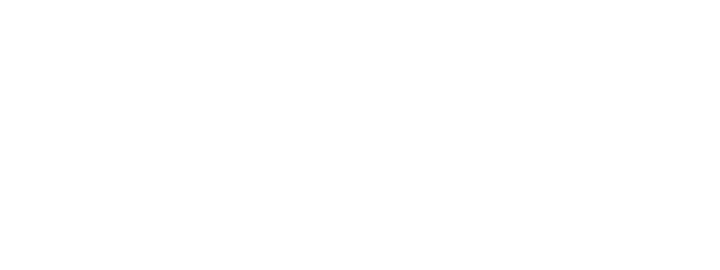

Cut through the noise

Automatically triage false positives using AI code analysis, including multi-file and multi-function dataflow validation.

Identify complex flaws

Go beyond traditional rule-based scanning to detect complex vulnerabilities like business logic and authentication flaws.

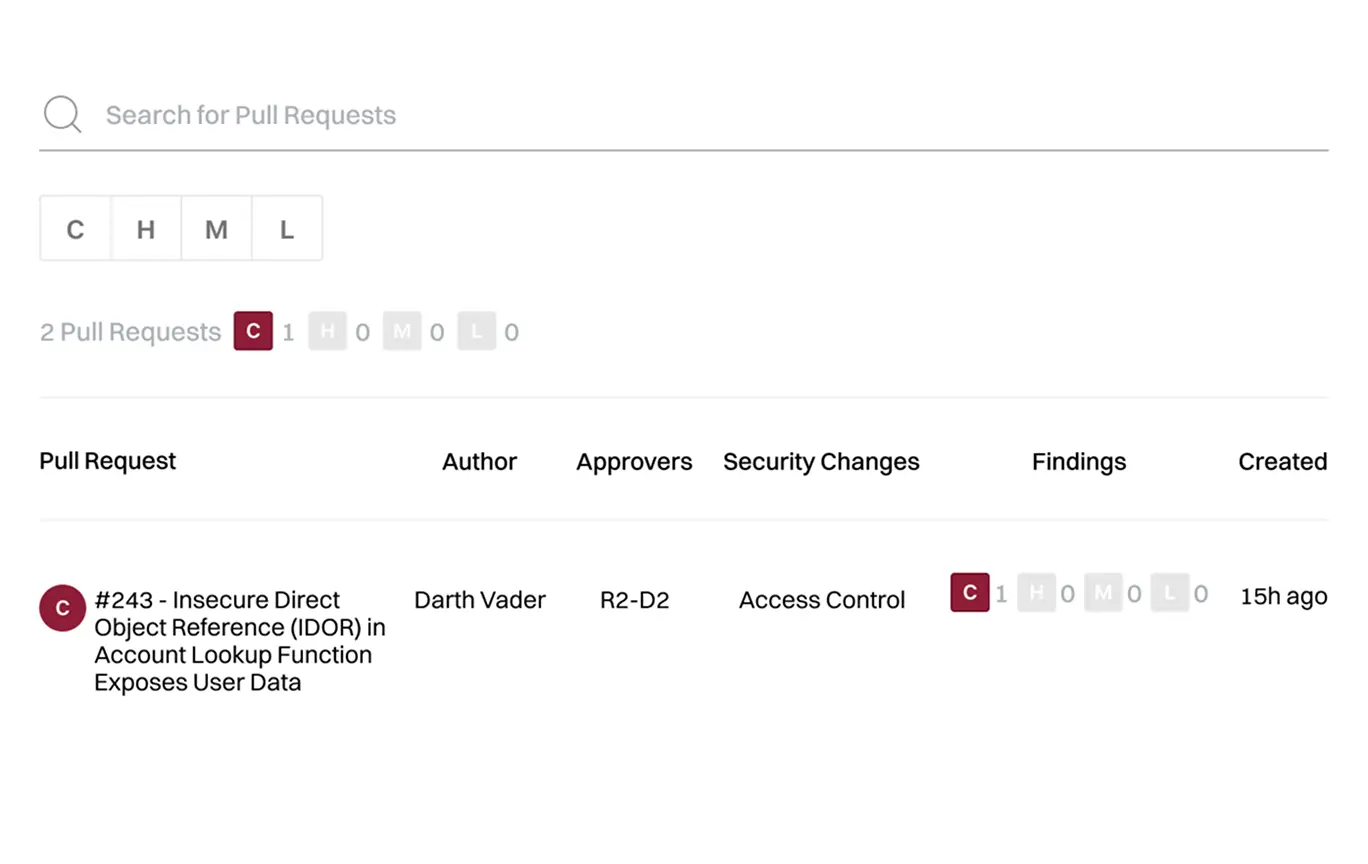

Fix issues at the source

Integrate directly into AI code editors to help developers and agents fix code before their first commit.

Securing code written by humans and AI at:

“Software analysis is hard, and there's only one company [Endor Labs] that's doing it correctly.”

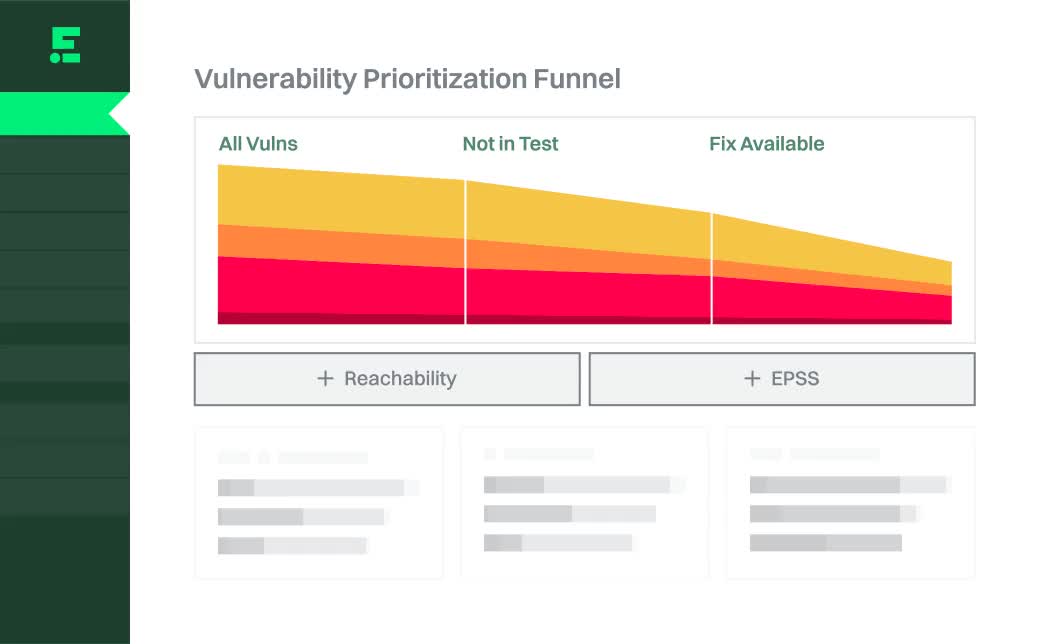

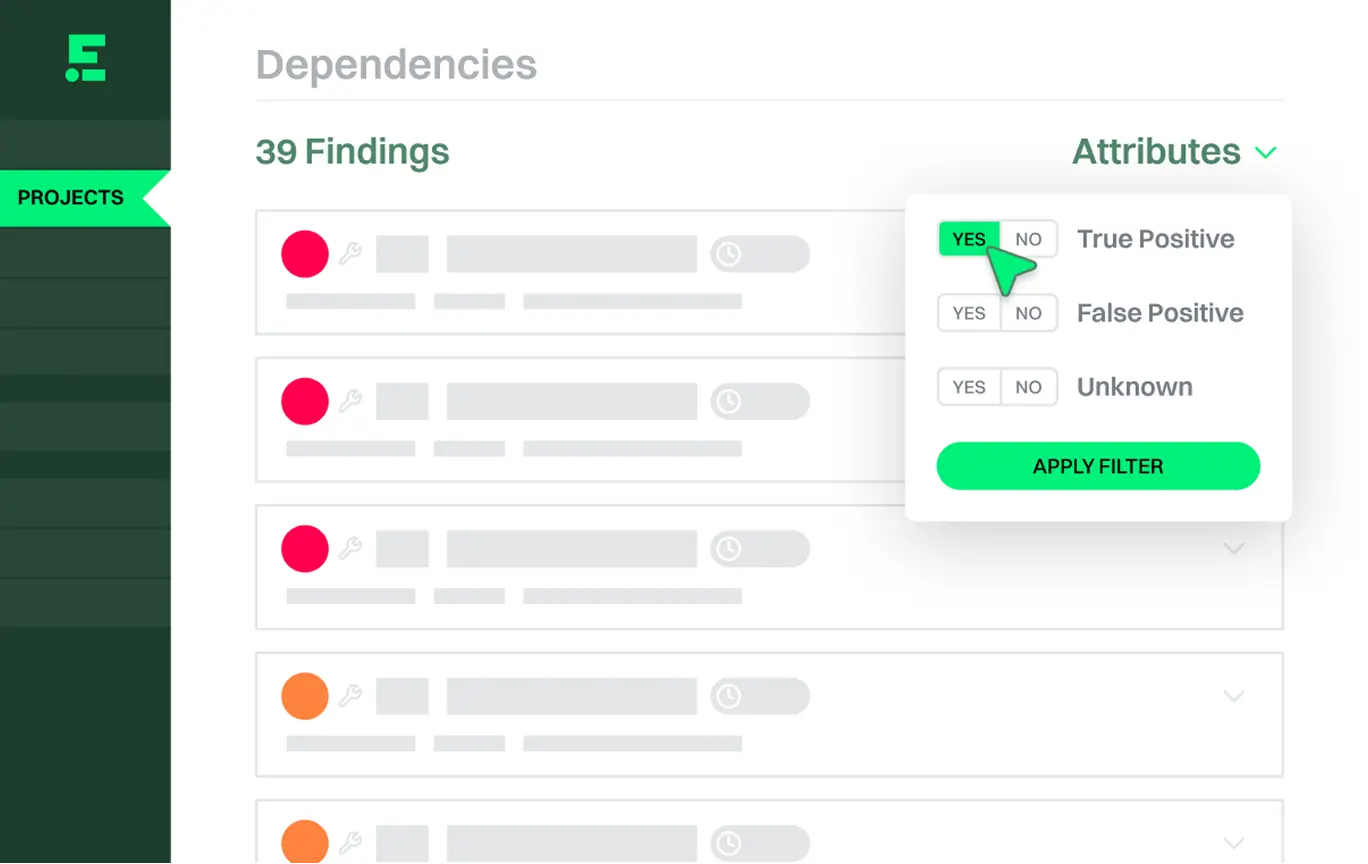

Dashboards

Monitor severity and risk trends and identify projects with the greatest risk exposure in your business.

Multi-file, multi-function analysis

Understands authentication guards and framework-specific sanitization, improving accuracy and reducing false positives.
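To make this concrete, here is a minimal, hypothetical sketch of the kind of code where cross-function context changes the verdict. The function names and the request shape are assumptions for illustration only; this is not product output or a product API. A scanner that inspects `render_comment` in isolation sees untrusted text interpolated into HTML, while following the data through `sanitize` shows the value is escaped before it reaches the sink.

```python
import html


def read_comment(form: dict) -> str:
    # Source: untrusted user input (hypothetical request form).
    return form.get("comment", "")


def sanitize(text: str) -> str:
    # Sanitizer: escapes HTML metacharacters using the standard library.
    return html.escape(text, quote=True)


def render_comment(text: str) -> str:
    # Sink: the string is interpolated into HTML markup.
    return f"<p>{text}</p>"


def handle_request(form: dict) -> str:
    # The tainted value passes through the sanitizer before the sink,
    # so a cross-site scripting finding on render_comment would be a
    # false positive for this particular call path.
    raw = read_comment(form)
    safe = sanitize(raw)
    return render_comment(safe)
```

If `handle_request` called `render_comment(raw)` directly, the same sink would be reachable with unescaped input, which is the case worth reporting.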

Custom prompts

Add custom prompts to catch risks unique to your codebase, with no training or specialized language required.

40+ languages supported

Java, JavaScript, Python, C#, C/C++, Go, Kotlin, TypeScript, Ruby, Rust, JSX, PHP, Scala, Swift, Terraform, and more.
