The empirical study conducted by Xia et al. summarizes the expectations of interviewees and survey participants regarding SBOMs in several findings, one of them being that “SBOM generation is belated and not dynamic, while ideally SBOMs are expected to be generated during early software development stages and continuously enriched/updated.”

This dynamicity is also anticipated by the NTIA, which states that SBOMs can be created “from the software source, at build time, or after build through a binary analysis tool”. However, they also note that those SBOMs “may have some differences depending on when and where the data was created”.

Those considerations were motivation enough to run a simple case study: What if we run several open source SBOM generators at different lifecycle stages and compare their results?

TL;DR - The case study showed that SBOMs generated by different tools at different lifecycle stages differ significantly and are hardly comparable. The lack of comparability is caused by the different detection capabilities of SBOM generators, but also by the level of detail included in Package URLs, an emerging, unified standard for component identification.

Ground Truth

To compute the performance of those SBOM generators, it is important to know the ground truth, which in our case means knowing a product’s actual software bill of materials. Otherwise, how would we know whether the components reported by those tools are truly contained in the product (true positives) or not (false positives), and whether any product components are missing altogether (false negatives)?

The impact of false positives and false negatives on software consumers is evident: The former potentially waste resources, e.g., if security response processes are triggered for vulnerabilities in a wrongly reported component. The latter become dangerous if vulnerabilities are disclosed for non-reported components: consumers are exposed without even knowing about it.

For the case study at hand, we chose Eclipse Steady v3.2.5, a program analysis tool developed in Java. More specifically, we focus on one of its modules called “rest-backend”, a Spring Boot application running inside a Docker container. The source code of the module is available on GitHub, and the Docker image can be found on Docker Hub.

Since the module is built with Apache Maven, a prominent build tool and dependency manager for Java, we ran the Apache Maven Dependency Plugin (mvn dependency:tree -pl rest-backend) on it to obtain the ground truth, i.e., the list of all module dependencies. It turns out that version 3.2.5 has 114 compile, 2 runtime and 41 test dependencies. A previous blog post explains Maven and dependency scopes in more detail, in case you’re not familiar with them.
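To make this step more concrete, here is a minimal Python sketch of how the output of mvn dependency:tree could be turned into a list of coordinates grouped by scope. It assumes the plugin's default text output, where each dependency line ends in groupId:artifactId:type:version:scope (with an optional classifier before the version). The function name and the sample lines are hypothetical illustrations, not the actual output for Eclipse Steady.

```python
import re
from collections import Counter

SCOPES = {"compile", "runtime", "test", "provided", "system"}

def parse_dependency_tree(output):
    """Return a list of ((groupId, artifactId, version), scope) tuples."""
    deps = []
    for line in output.splitlines():
        # Drop the "[INFO]" prefix and the tree-drawing characters (|, +, \, -).
        text = re.sub(r"^\[INFO\]\s*[|+\\\- ]*", "", line).strip()
        parts = text.split(":")
        if len(parts) == 5:      # groupId:artifactId:type:version:scope
            group, artifact, _type, version, scope = parts
        elif len(parts) == 6:    # groupId:artifactId:type:classifier:version:scope
            group, artifact, _type, _classifier, version, scope = parts
        else:
            continue             # module root line or unrelated log output
        # Maven may append markers such as "(optional)" after the scope.
        scope = scope.split()[0] if scope.split() else ""
        if scope in SCOPES:
            deps.append(((group, artifact, version), scope))
    return deps

# Hypothetical sample output (only the dom4j coordinate is taken from the case study).
SAMPLE = """\
[INFO] org.example:demo-module:jar:1.0.0
[INFO] +- org.dom4j:dom4j:jar:2.1.3:compile
[INFO] |  \\- org.example:transitive-lib:jar:0.9:runtime
[INFO] \\- org.example:test-helper:jar:1.2:test
"""

deps = parse_dependency_tree(SAMPLE)
scope_counts = Counter(scope for _, scope in deps)
print(scope_counts)
```

Counting the entries per scope in this way is how numbers such as "114 compile, 2 runtime and 41 test dependencies" can be derived from the plugin output.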

Approach

Once we knew what to expect from the SBOM generators, we proceeded as follows…

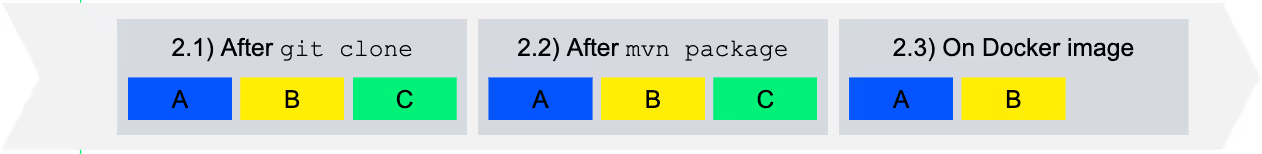

We chose three different open source SBOM generators (tools A, B and C). Tools A and B are generic tools able to scan single files, entire folders or Docker images. Tool C is a Maven plugin; it integrates tightly with the dependency manager and is not suited to analyzing anything else.

Those three tools are run on the sources of Eclipse Steady 3.2.5, after the build of the Maven module “rest-backend” and on the Docker image available on Docker Hub (again, with the exception of tool C, which cannot analyze Docker images).

Running those tools as described results in 8 SBOMs, all in CycloneDX format, which are evaluated with regard to three aspects.

First and most importantly, we compute precision and recall by comparing those SBOMs with the ground truth:

- Precision is the ratio of correctly identified components (true positives) to all identified components, both correct and incorrect ones (true positives and false positives respectively). Again, a false positive corresponds to a component that is listed in the SBOM even though it is not part of the software. The lower the precision, the more false positives, and vice versa.

- Recall is the ratio of correctly identified components (true positives) to all components of the software under analysis (the ground truth). The lower the recall, the more components were not correctly identified (false negatives) and vice versa.

For both precision and recall we say that an SBOM component is correctly identified if it has the correct Maven coordinate or GAV, which is composed of three elements: groupId, artifactId and version. If any of those elements is wrong or missing altogether, we say that the component is not correctly identified (false positive).
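The two metrics can be sketched in a few lines of Python. This is a minimal illustration under the matching rule described above (a component only counts as correct if groupId, artifactId and version all match). The function name and the toy data are our own assumptions and do not reproduce the case study's numbers.

```python
def precision_recall(reported, ground_truth):
    """Compare two collections of (groupId, artifactId, version) tuples."""
    reported, ground_truth = set(reported), set(ground_truth)
    true_positives = len(reported & ground_truth)
    false_positives = len(reported - ground_truth)   # wrong or incomplete GAVs
    false_negatives = len(ground_truth - reported)   # components that were missed
    precision = true_positives / (true_positives + false_positives) if reported else 0.0
    recall = true_positives / (true_positives + false_negatives) if ground_truth else 0.0
    return precision, recall

# Toy data: four real dependencies, three reported, one of them without a version.
ground_truth = {
    ("org.dom4j", "dom4j", "2.1.3"),
    ("org.example", "lib-a", "1.0"),
    ("org.example", "lib-b", "2.0"),
    ("org.example", "lib-c", "3.0"),
}
reported = {
    ("org.dom4j", "dom4j", "2.1.3"),
    ("org.example", "lib-a", "1.0"),
    ("org.example", "lib-b", None),   # missing version: counted as a false positive
}

precision, recall = precision_recall(reported, ground_truth)
print(f"precision = {precision:.2f}, recall = {recall:.2f}")
```

Note that the component with the missing version hurts both metrics: it is a false positive in the SBOM, and the real lib-b 2.0 remains a false negative.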

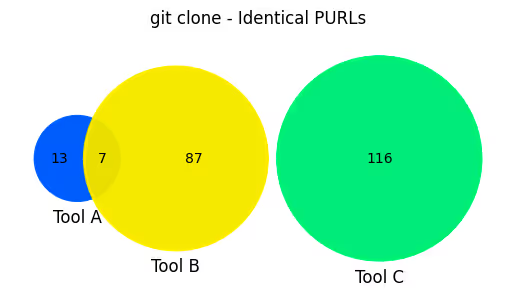

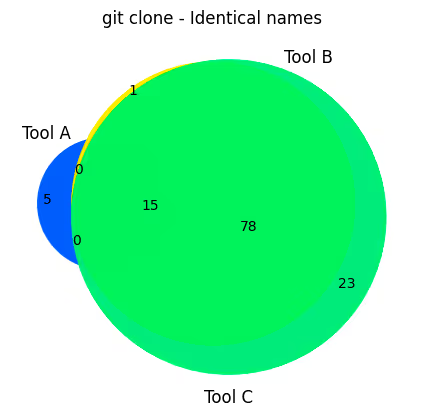

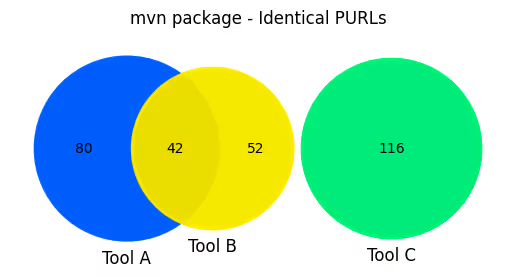

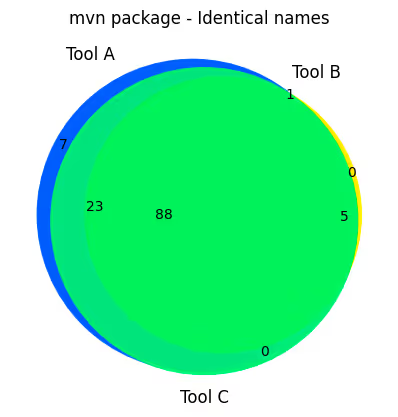

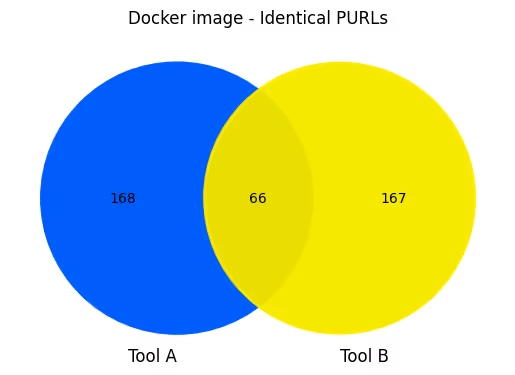

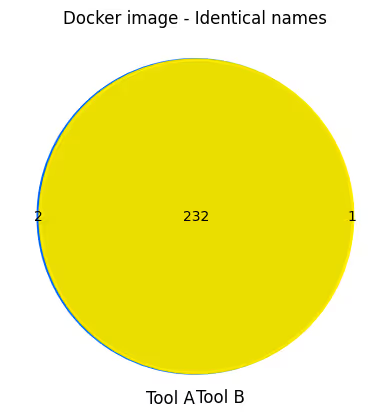

Second, we compare the SBOMs with each other to investigate whether and where they agree or disagree on the identified components. To this end, we generate two Venn diagrams for each of the three lifecycle stages:

- The first Venn diagram shows the intersection of identified components when considering their PURLs. The Package URL (PURL) is a unified, ecosystem-agnostic component identification scheme that has gained a lot of traction in recent years, and which is present in the SBOMs of all three tools.

- The second Venn diagram shows the intersection when considering the component name only, thus, ignoring other important parts of a component identifier such as the version.

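As PURLs play an important role in the SBOM comparison, note that they comprise up to seven elements (some required, others optional): scheme, type, namespace, name, version, qualifiers and subpath, written as scheme:type/namespace/name@version?qualifiers#subpath.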

Qualifiers, in particular, can be (and are) used to encode additional details. Example PURLs for a Maven package and a Debian package are as follows (note how qualifiers are used to denote the file type or platform architecture):
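To illustrate how a PURL breaks down into its elements, here is a simplified Python sketch. It is not a complete implementation of the PURL specification (for example, it ignores type-specific normalization rules), and a real project would more likely rely on an existing PURL library. The function name is hypothetical. The Maven example reuses a PURL discussed later in this post, and the Debian example is the one we recall from the PURL specification, not necessarily the one shown in the original figure.

```python
from urllib.parse import parse_qsl, unquote

def parse_purl(purl):
    """Split a PURL of the form scheme:type/namespace/name@version?qualifiers#subpath."""
    scheme, sep, rest = purl.partition(":")
    if scheme != "pkg" or not sep:
        raise ValueError(f"not a package URL: {purl!r}")
    rest, _, subpath = rest.partition("#")      # optional subpath
    rest, _, query = rest.partition("?")        # optional qualifiers
    type_, _, path = rest.lstrip("/").partition("/")
    if "@" in path:                             # optional version
        path, _, version = path.rpartition("@")
    else:
        version = ""
    namespace, _, name = path.rpartition("/")   # optional namespace
    return {
        "scheme": scheme,
        "type": type_.lower(),
        "namespace": unquote(namespace) or None,
        "name": unquote(name),
        "version": unquote(version) or None,
        "qualifiers": dict(parse_qsl(query)),
        "subpath": subpath or None,
    }

maven = parse_purl("pkg:maven/org.dom4j/dom4j@2.1.3?type=jar")
debian = parse_purl("pkg:deb/debian/curl@7.50.3-1?arch=i386&distro=jessie")
print(maven["qualifiers"], debian["qualifiers"])
```

In both examples the qualifiers carry information beyond the package identity itself: the file type for the Maven artifact, and the architecture and distribution for the Debian package.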

Third, we quickly look into other properties included by the SBOM generators helping consumers to use the SBOM for the intended purpose, i.e. software supply chain risk management.

Evaluation Results

SBOMs after git clone

After cloning the Git repository of Eclipse Steady, tools A and B were run on the subdirectory of the rest-backend module. Tool C was invoked by starting the respective Maven command on the module (mvn … -pl rest-backend).

The precision and recall of the three SBOM generators were as follows (the best tool is highlighted in bold font):

- Tool A: precision = 0.5, recall = 0.06

- Tool B: precision = 0.93, recall = 0.75

- **Tool C: precision = 1.0, recall = 1.0**

With both precision and recall of 1.0, tool C identifies all components perfectly, with neither false negatives nor false positives. This is presumably due to its integration into the Maven build lifecycle, which provides immediate access to the results of Maven’s dependency resolution process.

Tool A performs worst, with only 20 components being identified. Those comprise dependencies mentioned in the module’s pom.xml, including ones with test scope, as well as test archives present in the module’s src/test/resources folder. But even though the pom.xml is processed, transitive dependencies are not resolved, and neither are version identifiers. In summary, this results in both false positives (due to missing version information) and false negatives (e.g., for all the transitive dependencies).

Tool B, on the other hand, resolves the pom.xml file and ignores test resources. However, some of the versions are incorrect (those components are counted as false positives), and other dependencies are missing altogether (for unknown reasons), which correspond to false negatives.

The two Venn diagrams below show the intersection of components when considering complete PURLs (left) and the names only (right). The lack of intersection between Tool C and the other tools is due to the fact that its PURLs contain additional information that is not produced by the others, namely the qualifier type=jar. When only considering the component name, thus, ignoring the Maven groupId and version, the intersection of all three sets increases significantly, because A’s lack of version information, B’s wrong version information and C’s qualifier are all omitted.

However, it is important to note that the name is not sufficient to search for known vulnerabilities affecting the component (e.g., on OSV) or for component updates (e.g., on Maven Central). In other words, the name is merely the lowest common denominator.
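A small sketch shows why the name alone does not get a consumer very far. It builds the JSON body for a vulnerability lookup, assuming the request shape of OSV's public v1 query endpoint as we understand it (a version plus a package with name and ecosystem, where Maven package names take the form groupId:artifactId). The function name is hypothetical, no request is actually sent, and the OSV documentation should be checked before relying on this shape.

```python
import json

def build_osv_query(group_id, artifact_id, version):
    """Build a query body for a Maven component (assumed OSV v1 query shape)."""
    if not (group_id and artifact_id and version):
        # Without groupId and version the component cannot be identified unambiguously.
        raise ValueError("groupId, artifactId and version are all required")
    return {
        "version": version,
        "package": {
            "name": f"{group_id}:{artifact_id}",
            "ecosystem": "Maven",
        },
    }

payload = build_osv_query("org.dom4j", "dom4j", "2.1.3")
print(json.dumps(payload, indent=2))

# With only the component name, no meaningful query can be built.
try:
    build_osv_query(None, "dom4j", None)
    name_only_ok = True
except ValueError:
    name_only_ok = False
```

An SBOM entry that only carries the name dom4j would fail at the first check, which is exactly the limitation of name-based comparison.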

With regard to the additional data collected:

- Tool A includes SHA-1 digests, information on why a component is shown, e.g., pointing to a file, and (many) CPE identifiers that are presumably used when searching for vulnerabilities in the NVD.

- Tool B does not include any additional information.

- Tool C contains license information, several digests, external references such as to the homepage of the open source project producing the respective component and a description (all of which is probably taken from the pom.xml files of the Maven dependencies).

SBOMs after Maven Package

After running mvn package -pl rest-backend, the tools were run in the same way as before: tools A and B were pointed to the module’s folder, and tool C was invoked using Maven.

The SBOMs generated by tools B and C did not change at all, which indicates that they solely rely on the pom.xml to compute the SBOM. Tool A, on the other hand, identified many more components, which suggests that it found the result of the Maven build, a self-contained and executable JAR in the module’s target directory.

- Tool A: precision = 0.56, recall = 0.44

- Tool B (as before): precision = 0.93, recall = 0.75

- Tool C (as before): precision = 1.0, recall = 1.0

In terms of absolute numbers, tool A identified the most components (130 components in total, 122 unique ones), which is due to the fact that it also collects test dependencies. However, when looking at the SBOM, those test components cannot be distinguished any longer from other components, which makes SBOM consumers treat them in the same way as all other components.

The Venn diagram computed on the basis of PURLs (left) shows that the PURLs created by all tools differ significantly, which is best explained by means of an example. The rest-backend module depends on a Maven artifact with the following coordinates (GAV): (groupId=org.dom4j, artifactId=dom4j, version=2.1.3).

Tool A includes it in the SBOM with PURL pkg:maven/dom4j/dom4j@2.1.3 (thus, a wrong groupId), tool B with pkg:maven/org.dom4j/dom4j@2.1.3 and tool C with pkg:maven/org.dom4j/dom4j@2.1.3?type=jar. While tool A is simply wrong, it is not straightforward for consumers to understand whether the different PURLs reported by tools B and C make any practical difference in terms of software security or supply chain risk.

As before, when intersecting components only using their names, the overlap is much more significant.
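The effect of the comparison key can be reproduced with the three dom4j PURLs from the example above. The following Python sketch intersects the tools' results using the full PURL, the PURL without qualifiers, and the name only. The helper names are our own, and the string handling is deliberately simplistic rather than a full PURL parser.

```python
def full_purl(purl):
    return purl

def without_qualifiers(purl):
    # Drop an optional "#subpath" and "?qualifiers" suffix.
    return purl.split("#", 1)[0].split("?", 1)[0]

def name_only(purl):
    # Keep only the last path segment and cut off the version.
    return without_qualifiers(purl).rsplit("/", 1)[-1].split("@", 1)[0]

def common_to_all(key, *sboms):
    """Components shared by all SBOMs after mapping each PURL through `key`."""
    keyed = [{key(purl) for purl in sbom} for sbom in sboms]
    return set.intersection(*keyed)

tool_a = {"pkg:maven/dom4j/dom4j@2.1.3"}
tool_b = {"pkg:maven/org.dom4j/dom4j@2.1.3"}
tool_c = {"pkg:maven/org.dom4j/dom4j@2.1.3?type=jar"}

for key in (full_purl, without_qualifiers, name_only):
    print(key.__name__, common_to_all(key, tool_a, tool_b, tool_c))
```

Dropping the qualifiers is enough to make tools B and C agree, but tool A only joins the intersection once the groupId and version are ignored as well, which is the point where the identifier stops being useful for vulnerability lookups.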

SBOMs for Docker Image

Finally, we ran tools A and B on the Docker image of the module, which resulted in a number of additional OS-level components compared to the previous runs.

Precision and recall were computed considering only those SBOM components whose PURL starts with pkg:maven (since OS-level dependencies are not part of the ground truth, they would have resulted in false positives).

- Tool A: precision = 0.59, recall = 0.55

- Tool B: precision = 0.96, recall = 0.91
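The filtering step that precedes these numbers can be sketched as follows. The snippet assumes a CycloneDX JSON document with a top-level components array whose entries may carry a purl field and nested components. The sample document is a hypothetical, heavily shortened SBOM, and the apt PURL in it is made up for illustration.

```python
import json

def iter_components(node):
    """Yield all components of a CycloneDX document, including nested ones."""
    for component in node.get("components", []):
        yield component
        yield from iter_components(component)

def maven_purls(bom):
    """Return the PURLs of all Maven components, ignoring OS-level packages."""
    return {
        component["purl"]
        for component in iter_components(bom)
        if component.get("purl", "").startswith("pkg:maven/")
    }

# Hypothetical, shortened SBOM of a Docker image.
SAMPLE_BOM = json.loads("""
{
  "bomFormat": "CycloneDX",
  "specVersion": "1.4",
  "components": [
    {"type": "library", "name": "dom4j", "purl": "pkg:maven/org.dom4j/dom4j@2.1.3"},
    {"type": "library", "name": "apt", "purl": "pkg:deb/debian/apt@2.2.4?arch=amd64"},
    {"type": "file", "name": "some-file-without-purl"}
  ]
}
""")

selected = maven_purls(SAMPLE_BOM)
print(selected)
```

Only the Maven component survives the filter, so OS-level packages such as apt neither help nor hurt precision and recall against the Maven-based ground truth.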

Tool B performed significantly better than A considering those metrics. Still, tool B missed two important components found by tool A, namely the Java runtime and one of its libraries.

What we have discussed above regarding the use of different PURL identifiers for the same Maven dependency can also be observed when looking at OS-level components: both tools differ with regard to qualifiers, as exemplified by the PURLs they created for apt.

As before, this reduces the intersection of the PURL-based Venn diagram (left), while name-based Venn diagrams - though of limited use - show a much more significant overlap.

Concluding Observations

The case study resulted in several important observations:

First, using the same tool at different lifecycle stages has an impact on the SBOM accuracy. Tool A, for instance, performed worst when being run on the sources, and best when run on the Docker image.

Second, the tool integrated into Maven produced perfect results. The two generic tools, which identify components “from the outside”, struggled to correctly determine all elements of Maven’s identification scheme (and lacked some components altogether).

Third, the SBOMs produced by different tools are hardly comparable - even though they all include PURLs to identify SBOM components. Besides wrong identification by the generic tools, another reason was the additional details added by Tool A (for Debian packages) or Tool C (for Maven dependencies).

The last point raises one interesting question: What is the practical value of such qualifiers with regard to supply chain risks and software security? If there is one, such qualifiers should be included by all SBOM generators, e.g., the platform architecture or the Maven type. In this regard, we hope that a future version of the NTIA document specifies minimum fields per ecosystem in greater detail (i.e. required elements of the respective naming scheme). And if there’s no added value, the qualifiers should be omitted so as not to confuse SBOM consumers and to make SBOMs comparable.

Fourth, SBOMs should specify whether a given component is present in the software product itself, or whether it has been used for its development, build or distribution - such as the test dependencies that tool A included by default, but which could not be distinguished any more in the SBOM. Those dependencies are definitely security relevant - any upstream component involved in development, build or distribution of software is. However, issues affecting such dependencies require different remedies than, for example, vulnerabilities in components that run in production.

Benchmark

Even though we only ran a small case study, it left the impression that the accuracy of SBOM generators differs widely from one tool to another, and that it is very difficult for SBOM consumers to evaluate whether what they receive is actually correct.

The experiment was deliberately done using an open source application that we know very well and whose sources are publicly accessible. A comparable analysis is impossible for proprietary software products - as a result of which SBOM consumers cannot do more than blindly trust the vendor’s SBOM.

Going forward, it will be important to create a benchmark that SBOM generators can be evaluated against. It should cover different programming languages and different layers of the technology stack. Evaluation results of different SBOM generators (and versions) could be published such that the industry gains confidence in their capabilities. And the next time consumers receive an SBOM from their vendor, they can check whether it was created with an SBOM generator of acceptable quality…
