As I shared recently, several of the industry’s most cited reports of the year have dropped, including Verizon’s Data Breach Investigations Report (DBIR), Mandiant’s M-Trends, and Datadog’s State of DevSecOps.

Vulnerability exploitation is on the rise

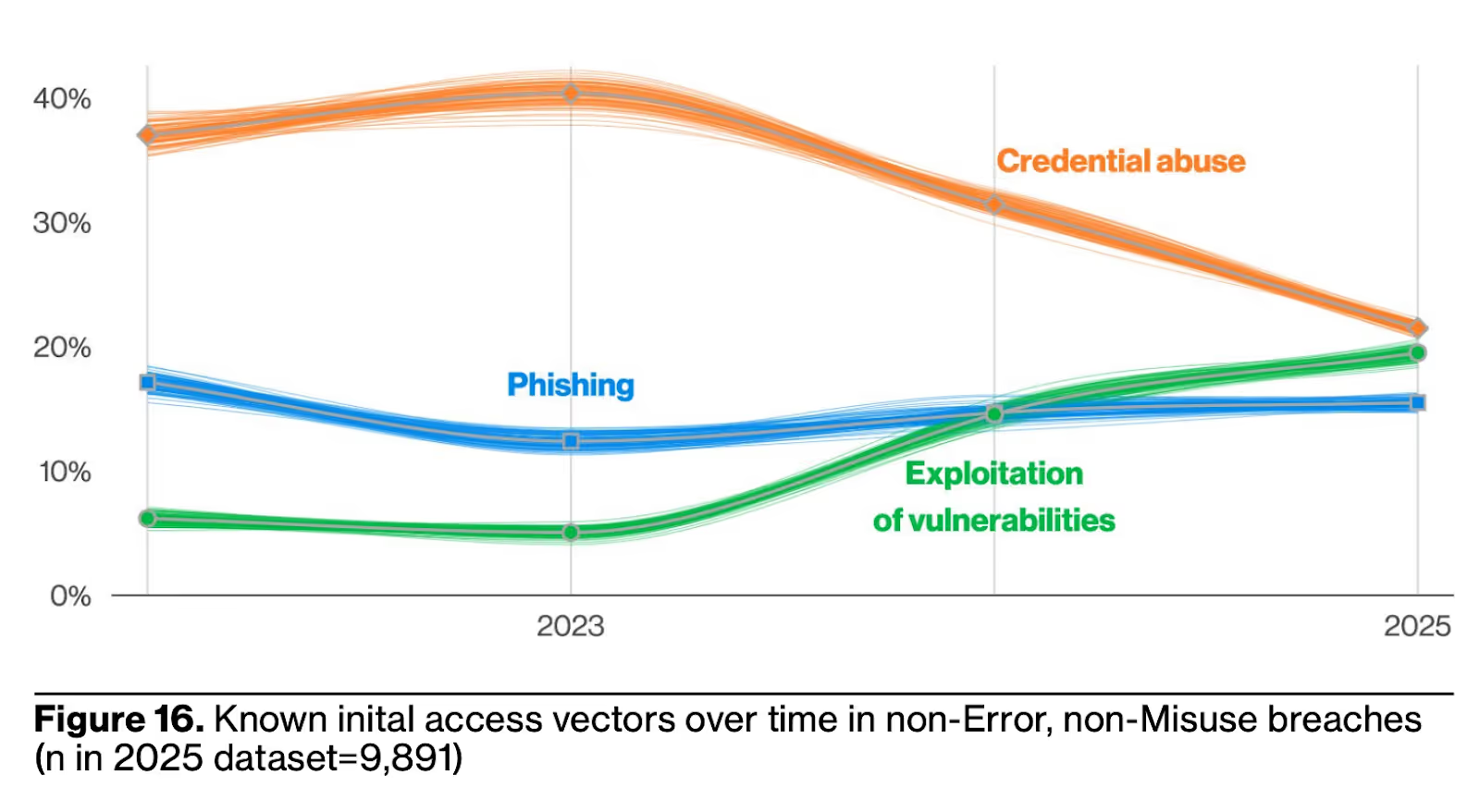

While each report is comprehensive and spans many problem spaces and topics, all of them touch on AppSec trends. One trend they all share is that vulnerability exploitation is on the rise. In the DBIR’s case, it has overtaken phishing, which has historically held a leading spot alongside credential compromise.

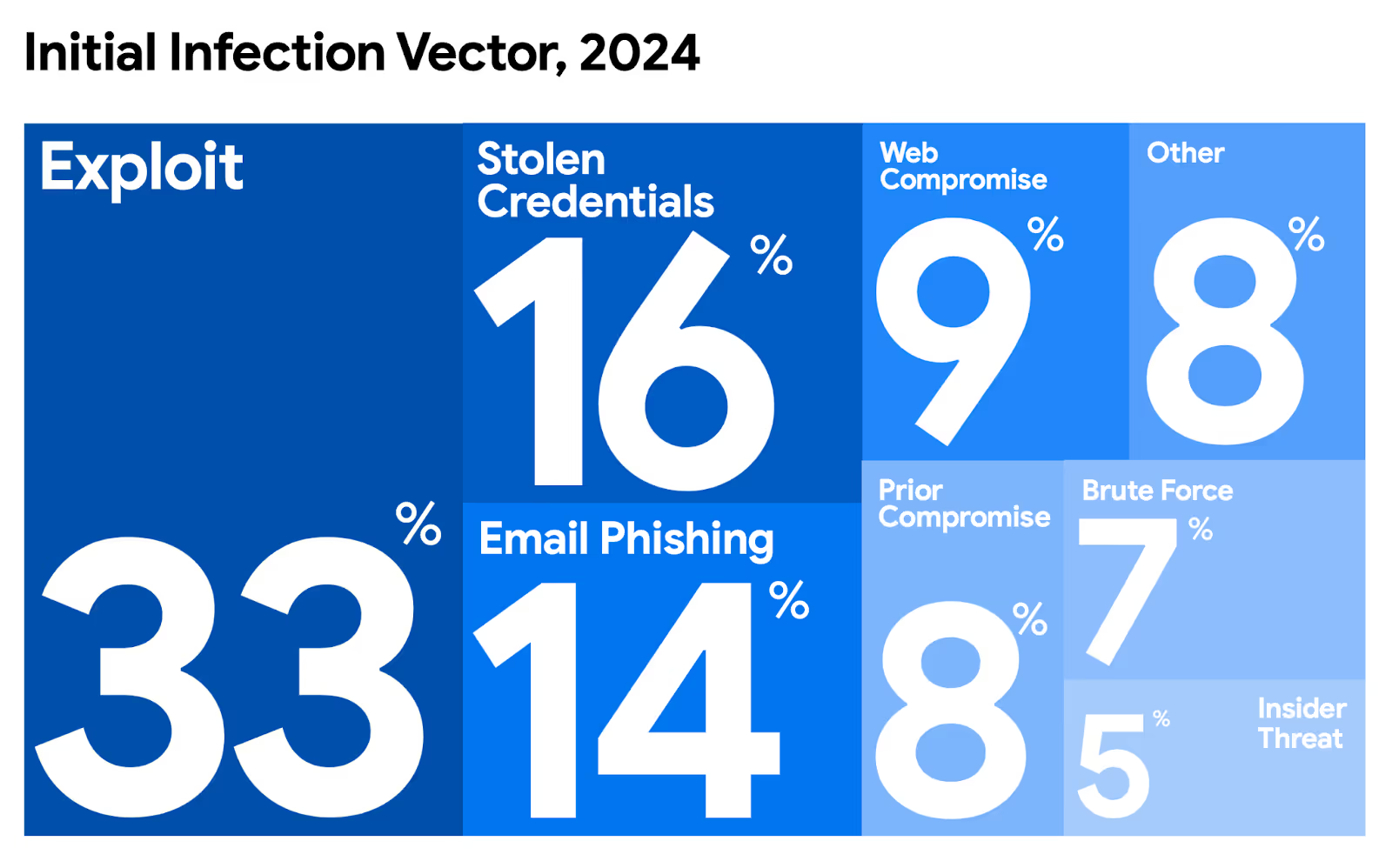

This comes after the 2024 DBIR dubbed this the “vulnerability era,” meaning the problem has only gotten worse in the year since. Mandiant’s M-Trends report had a similar finding: the most common initial infection vector in 2024 was vulnerability exploitation.

In other words, attackers are not only succeeding more often with vulnerability exploitation but are also increasingly focusing on it as a primary method of attack.

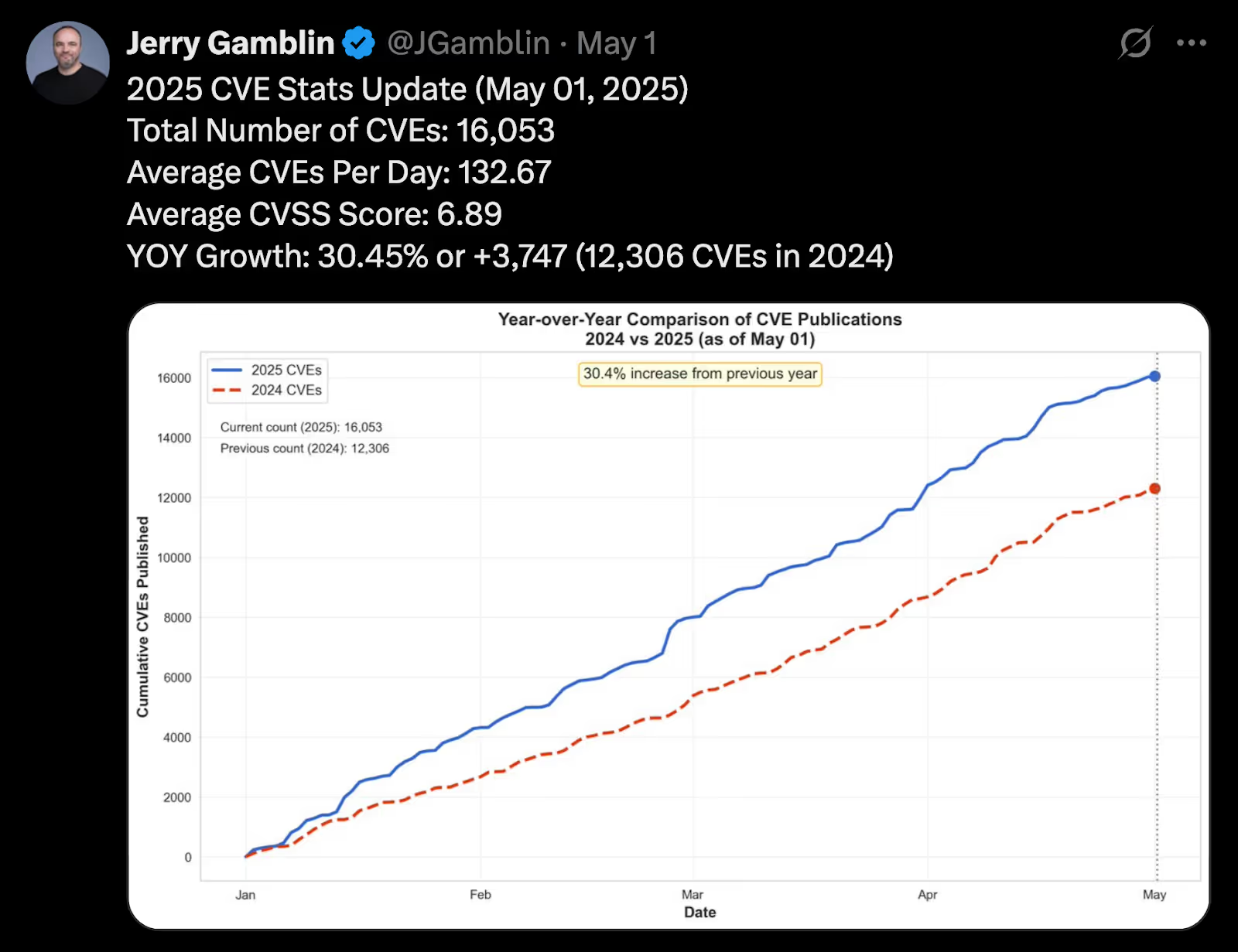

Flip it around and look at defenders, and we see the opposite outcome when it comes to vulnerability remediation. We’re struggling more than ever to keep pace with new vulnerabilities, drowning in massive backlogs, and trying to use context to separate signal from noise.

As Jerry Gamblin, a vulnerability researcher I often cite, shows above, we’re currently seeing 30% year-over-year (YoY) CVE growth. That doesn’t even account for the NVD’s historical backlog of 39,000 unenriched vulnerabilities.

Why traditional AppSec can’t keep up

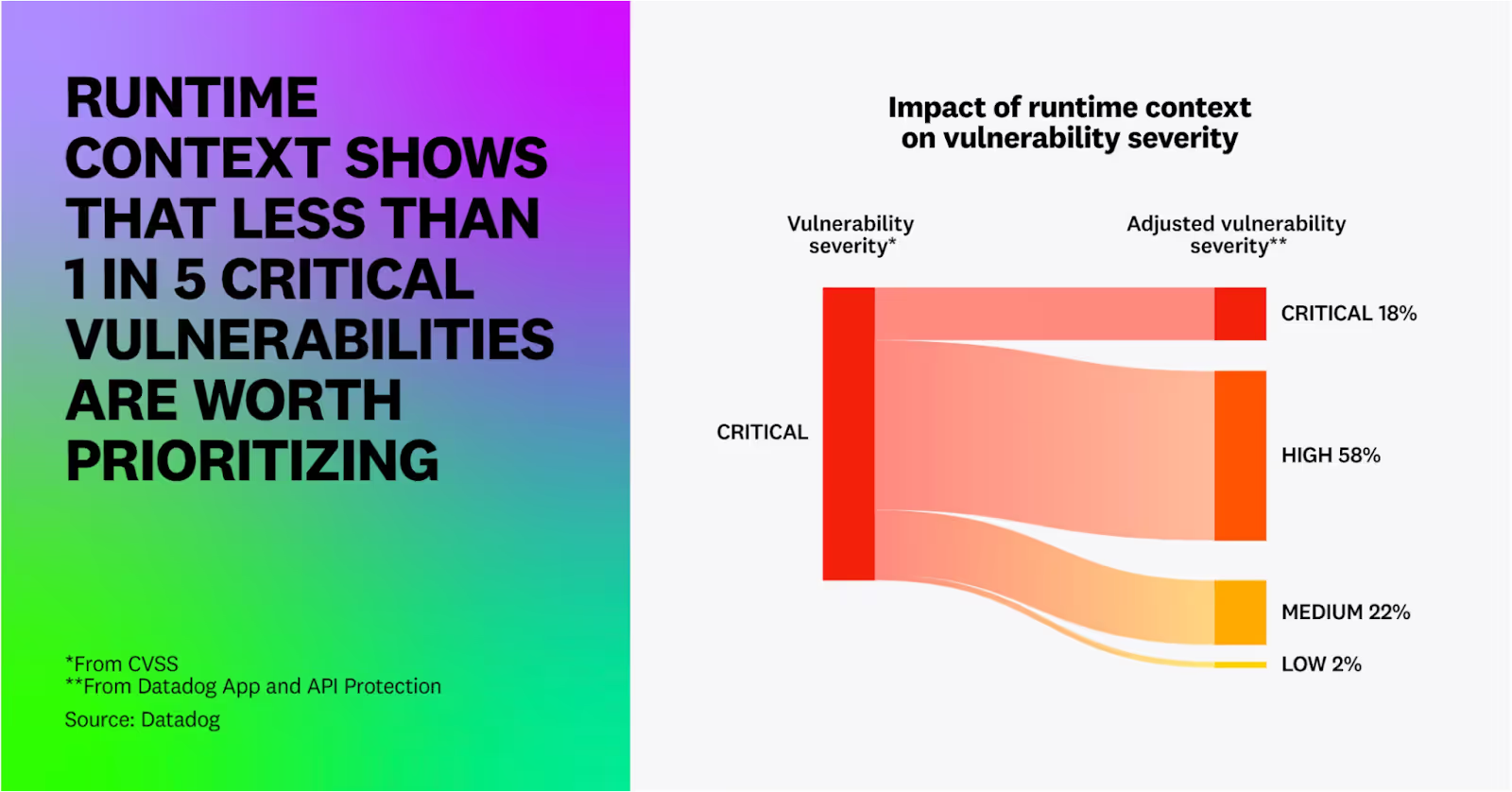

Amid this massive growth in vulnerabilities, organizations struggle to know what to remediate, even though we know fewer than 5% of the CVEs published in a given year are ever exploited. Organizations often waste insane amounts of time and labor forcing development and engineering peers to remediate vulnerabilities that pose little actual risk. Yet we wonder why they hate us and dread engaging with us.

Datadog’s report helps substantiate this, showing that only 20% of “critical” vulnerabilities (don’t get me started on the nonsense of CVSS base scores) are exploitable at runtime.

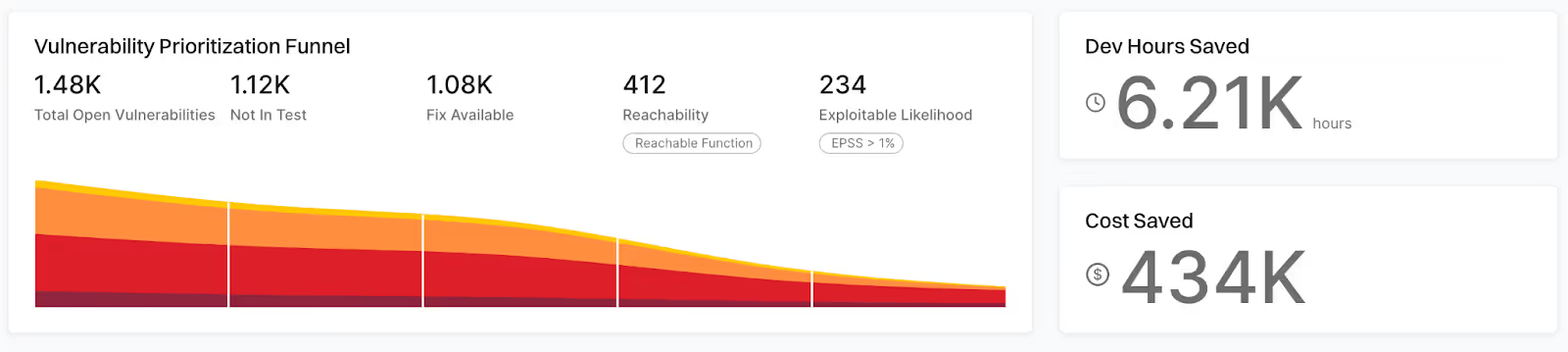

Other firms, such as Endor Labs, have shown similar findings via reachability analysis: once context is applied, 92% of vulnerabilities turn out to be pure noise. That context includes whether a vulnerability is known to be exploited, is likely to be exploited (e.g., per EPSS), is reachable, has existing exploits or PoCs, has a fix available, and so on.
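
To make context-based prioritization concrete, here is a minimal Python sketch that assumes each finding has already been enriched with the signals listed above. The `Finding` fields, the `triage` helper, the 0.1 EPSS cutoff, and the placeholder CVE IDs are hypothetical illustrations, not Endor Labs’ data model or any real tool’s API. The rule it encodes is one reasonable policy among many: keep a finding only if it is reachable and shows some evidence of exploitation.

```python
from dataclasses import dataclass


# Hypothetical finding record; field names are illustrative assumptions,
# not any vendor's schema.
@dataclass
class Finding:
    cve_id: str
    known_exploited: bool  # e.g., listed in CISA's Known Exploited Vulnerabilities catalog
    epss: float            # EPSS probability of exploitation, 0.0 to 1.0
    reachable: bool        # outcome of reachability analysis
    public_exploit: bool   # a public exploit or PoC exists
    fix_available: bool    # a patched version or upgrade path exists


# Assumed cutoff; real programs tune this to their own risk tolerance.
EPSS_THRESHOLD = 0.1


def is_actionable(f: Finding) -> bool:
    """Keep a finding only if the vulnerable code is reachable AND there is
    some evidence of exploitation (known, likely, or a public exploit)."""
    exploit_signal = f.known_exploited or f.public_exploit or f.epss >= EPSS_THRESHOLD
    return f.reachable and exploit_signal


def triage(findings: list[Finding]) -> tuple[list[Finding], list[Finding]]:
    """Split findings into (fix_now, backlog). fix_now is ordered with
    known-exploited items first, then items with a fix available,
    then by descending EPSS."""
    fix_now = [f for f in findings if is_actionable(f)]
    backlog = [f for f in findings if not is_actionable(f)]
    fix_now.sort(key=lambda f: (not f.known_exploited, not f.fix_available, -f.epss))
    return fix_now, backlog


# Placeholder IDs, not real CVEs.
findings = [
    Finding("CVE-A", known_exploited=True, epss=0.90, reachable=True, public_exploit=True, fix_available=True),
    Finding("CVE-B", known_exploited=False, epss=0.02, reachable=True, public_exploit=False, fix_available=True),
    Finding("CVE-C", known_exploited=False, epss=0.40, reachable=False, public_exploit=True, fix_available=False),
    Finding("CVE-D", known_exploited=False, epss=0.35, reachable=True, public_exploit=False, fix_available=False),
]
fix_now, backlog = triage(findings)
print([f.cve_id for f in fix_now])  # ['CVE-A', 'CVE-D']
print([f.cve_id for f in backlog])  # ['CVE-B', 'CVE-C']
```

Treating reachability as a hard gate and exploitation evidence as a second filter is a design choice in this sketch; a team with lower risk tolerance might route high-EPSS but unreachable findings to a slower remediation queue instead of the backlog.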

AI-driven development is widening the gap

Traditional vulnerability management approaches burden our development peers with this toil, and that’s before accounting for developers now leaning into AI-driven development tools and copilots and seeing productivity boosts of roughly 40%. Many now claim AI is writing outsized portions of code and may soon write nearly all of it.

I covered this point in a recent article titled “Security’s AI-Driven Dilemma: A discussion on the rise of AI-driven development and security’s challenge and opportunity to cross the chasm”.

As I tried to lay out in that article, it is outright foolish to think our methods of the past will succeed in the age of AI-driven development and wherever software development is headed. That includes the “shift left” and DevSecOps eras, in which we threw a bunch of noisy, low-fidelity tooling into CI/CD pipelines and then dumped massive lists of context-free findings onto developers.

Put simply, what got us “here,” which isn’t a good place, certainly won’t get us “there”: the more secure and resilient digital ecosystem we’re all ultimately aiming for.

The truth is we need to be early adopters and innovators with AI, or we risk not only falling further behind but also perpetuating the problems of the past: drowning in vulnerabilities, damaging relationships with our development peers, and looking nothing like the “business enabler” security likes to LARP as. We know our development peers are using this technology and that it is fundamentally changing software development. We also know attackers are experimenting with AI.

As I wrote in “Security’s AI-Driven Dilemma,” LLMs and coding assistants are fundamentally changing the nature of modern software development. This includes projections of double-digit productivity boosts (e.g., higher code velocity) and AI writing roughly 40% of all new code in some organizations. Interestingly, while the topline figure is a 26% increase in productivity, senior developers saw gains in the range of 8-13%, while junior developers saw gains of 27-39%.

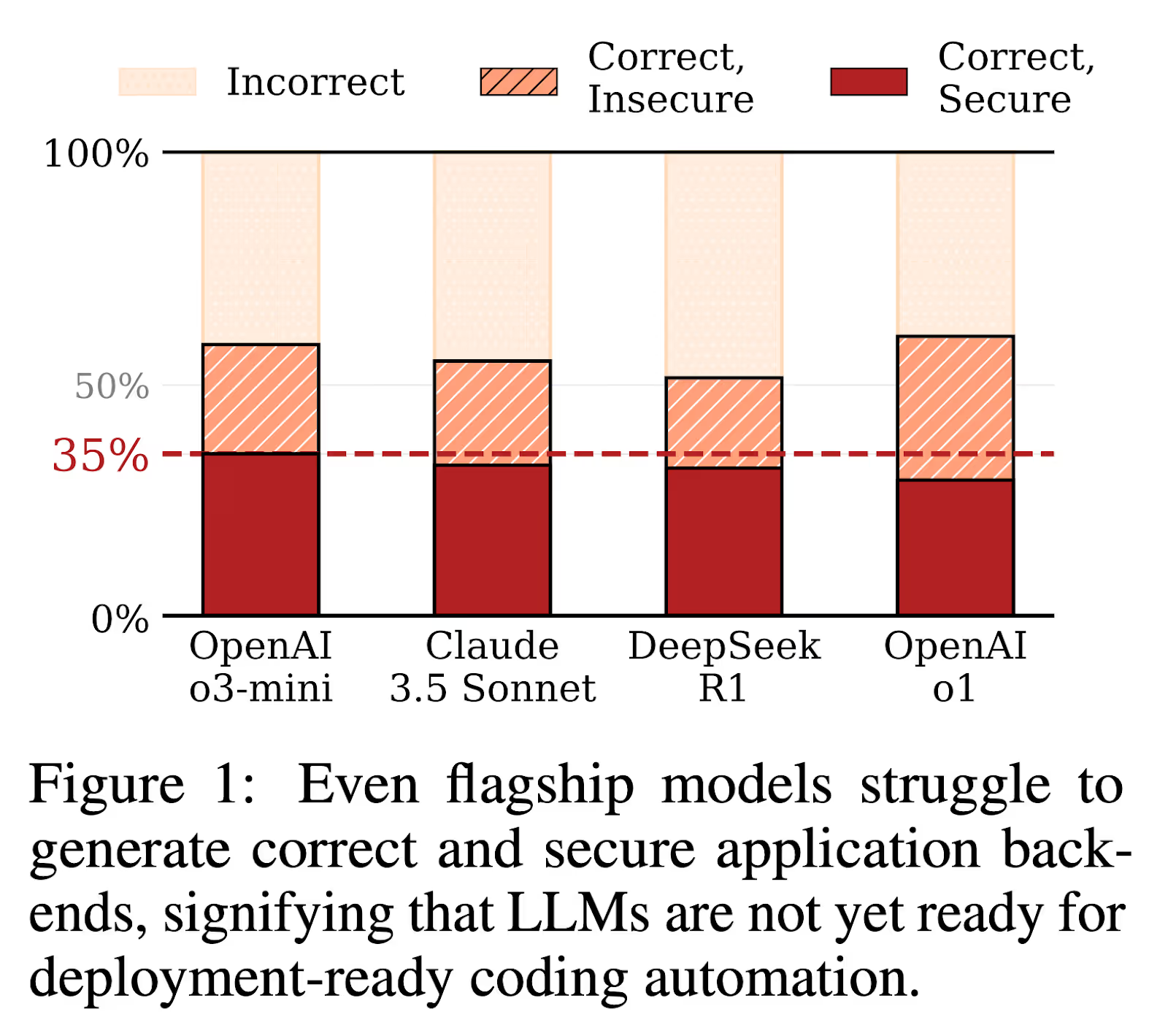

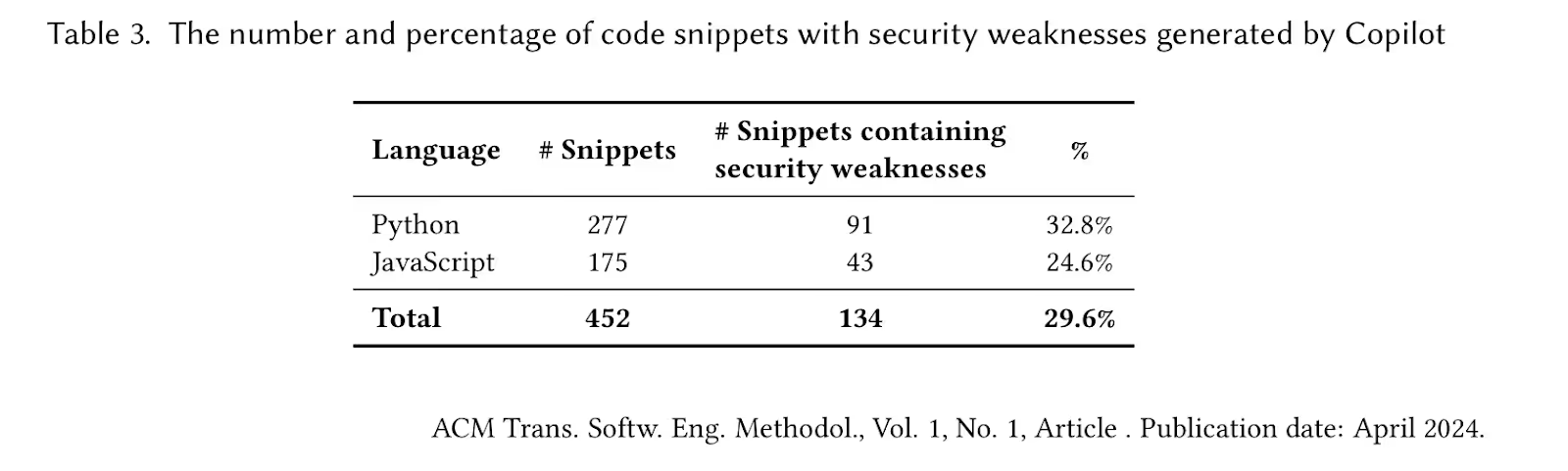

This means shorter iterations, faster development and deployment cycles, and a growing vulnerability backlog that security already can’t keep up with. That alone is a problem, but research now shows that roughly 62% of AI-generated solutions are incorrect, contain security vulnerabilities, or both. Another study found that 29.6% of AI-generated code snippets have security weaknesses.

While this is concerning, it shouldn’t be surprising, given that these frontier models and coding assistants are trained on large bodies of open-source code, which inherently contains flaws, weaknesses, and vulnerabilities. Nor can we ignore the consistent rise in CVEs over the last few years: the very projects introducing these CVEs are also used to train new models.

Even though these vulnerabilities are commonplace in AI-generated code and coding assistant output, developers implicitly trust these snippets for ease of use, velocity, and convenience. Remember, speed to market and revenue have always taken precedence over security, especially in the many organizations where developers are evaluated on how fast they move and how productive they are, not on how secure their output is.

This means the age-old model of security as a “developer tax,” injecting low-fidelity, poor-quality “findings” from low-context tooling into developers’ workflows, will only lead to friction, workarounds, and a growing backlog of noise that never gets triaged or addressed.

How AppSec must evolve

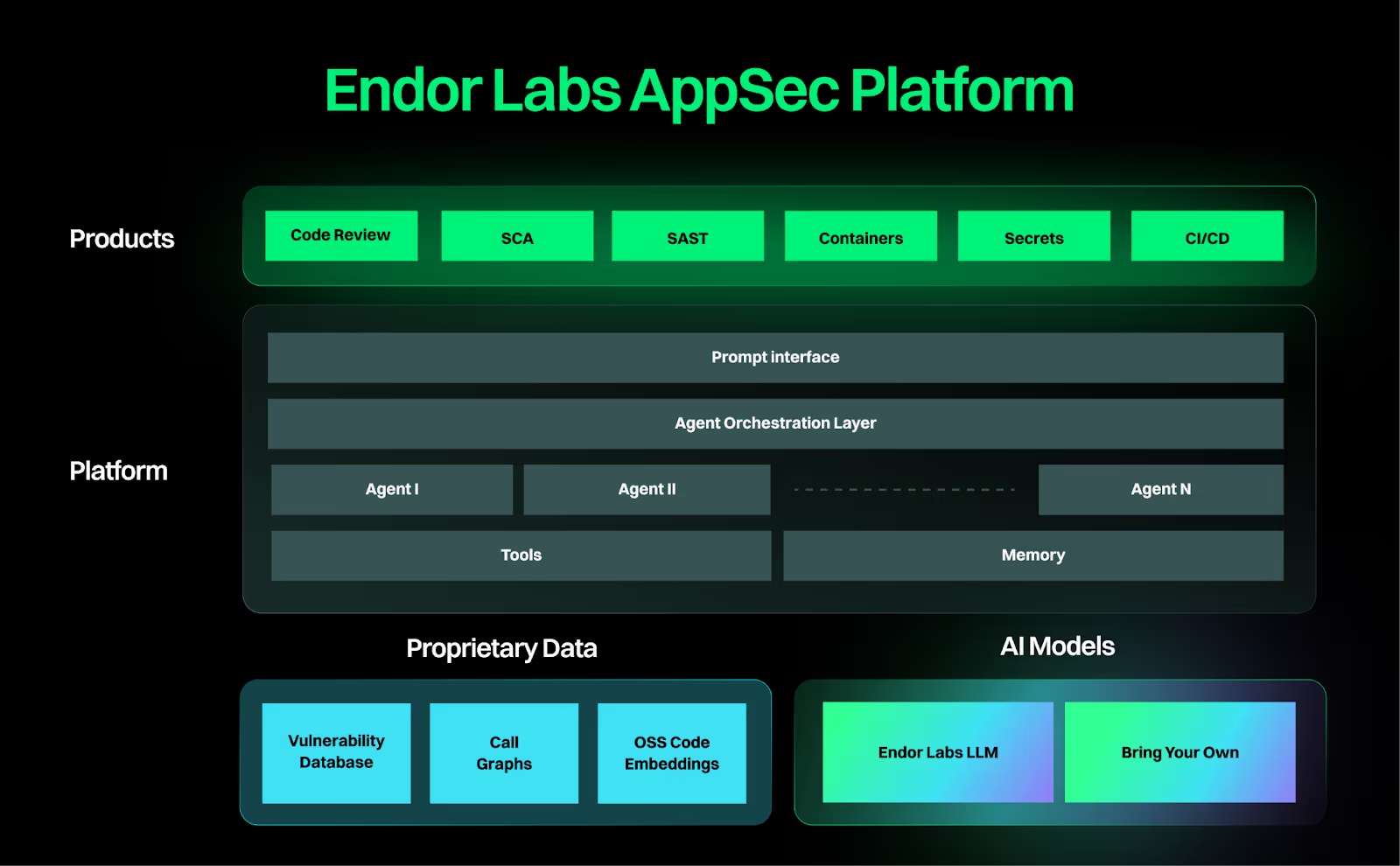

This is why Endor Labs is leaning in as the AppSec platform for the AI era. That starts with a proprietary vulnerability database that provides deep context on open source security, going well beyond known vulnerabilities to include leading indicators of risk, such as those in the OWASP Open Source Software (OSS) Top 10. It also includes critical capabilities such as reachability analysis, which help organizations prioritize real risks rather than noise and toil.

As discussed above, AI-driven development is poised to fundamentally change software development. It also offers an incredible opportunity to systemically change how we approach security. The potential to create vulnerable code may be amplified, but so is the opportunity to fix it.

For example, LLMs and coding agents can guide developers in making security fixes, or even fix vulnerabilities and flaws themselves, at the same time the code is being written. This avoids disrupting flow, switching tools, adding cognitive load, and more, truly leaning into a developer-first security model. Of course, this requires high-quality data on vulnerabilities and flaws to be delivered directly to developers, natively within the tools and workflows they already use.

Historically, IDE plugins either didn’t provide this data directly, relied on unwieldy fuzzy rules that were hard to curate and maintain, or resolved issues inaccurately, often producing significant false positives, especially in software composition analysis (SCA) and static application security testing (SAST).

Endor Labs understands the risks associated with AI-driven development, but we also understand its potential. That means we aren’t perpetuating the problem of the past, where security is a late adopter and laggard with new technologies; instead, we’re leaning in as an early adopter and innovator.

One clear example is our Endor Labs MCP Server, which integrates natively with AI coding tools such as GitHub Copilot and Cursor. It lets security embed analysis and feedback directly into developer workflows without breaking flow, avoiding the bolt-on (rather than built-in) security developer tax of ages past.

We also launched AI Security Code Review, which takes a multi-agent approach to detecting security design flaws at scale.

Its multi-agent AI system analyzes each pull request (PR) with the context and insight of an experienced AppSec engineer to detect changes that may affect the application’s and the organization’s security posture. This is key because AppSec teams have historically been significantly outnumbered by developers and engineers in large enterprise environments. It uses AI as a force multiplier for security, much as our development peers are using this promising technology to enhance and accelerate development.

Closing thoughts

As I discussed above, the state of AppSec is a bit dire, with runaway vulnerability counts, exponentially growing vulnerability backlogs, and security’s continued inability to keep pace with modern development.

To top it all off, this was BEFORE the rapid ascent and adoption of AI-driven development, which is poised to exacerbate these problems. This reality is a call to action for AppSec and security to lean into these innovative technologies, much as our development and business peers, and our adversaries, already are.

What got us here won’t get us to where we need to go, especially in the age of AI. We find ourselves at a crossroads: to continue the methodologies of the past or pivot to innovative approaches that can address systemic challenges and truly position security as a business enabler.
