By now, everyone is well aware of the rapid adoption of AI across countless use cases and industries. One of the sectors where adoption is most pronounced is software development, particularly code generation. While figures vary, we routinely see headlines about developers and organizations claiming exponential productivity gains, along with increased volume and output, from AI coding.

Many have raised concerns about the impact of AI-driven development on cybersecurity, pointing to research showing that LLMs output vulnerable code over half of the time, that developers inherently trust those outputs, and even that developers admit to knowingly shipping vulnerable code due to competing priorities such as speed to market and productivity.

Those concerns aside, little research has examined the security of AI-generated code across successive iterations, even though that better reflects real-world development, where developers repeatedly refine code as they build and deploy applications.

However, a recent study, updated in September 2025, caught my attention because it shows, paradoxically, that the security of AI-generated code deteriorates with more iterations rather than improving. Even more perplexing, the code gets less secure even when the model is prompted to make it more secure.

These findings were published in a paper titled “Security Degradation in Iterative AI Code Generation – A Systematic Analysis of the Paradox.” The research is concise, at only 8 pages, so I strongly recommend reading it if you’re interested in the intersection of AI and cybersecurity. I’ll also summarize some of the key findings and their implications below.

The researchers set out to determine how initially secure code changes after multiple rounds of AI “improvements.” They used four prompting strategies, each with a different focus: efficiency, features, security, and ambiguous improvement, the last of which simply asked the AI to “improve” the code without a specific focus.

After each iteration, they applied traditional security analysis: static application security testing (SAST), manual code review, and severity classification of the identified vulnerabilities. In total, they analyzed 400 generated code samples. The study used OpenAI’s GPT models because of their widespread adoption on platforms such as GitHub Copilot.
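To make the feedback loop concrete, here is a minimal Python sketch of the setup as described: each round’s output is fed back in as the next round’s input, ten rounds per strategy. The `generate` callable and the prompt wording are hypothetical stand-ins, and starting every strategy from the same seed code is an assumption; the paper’s actual harness and prompts may differ.

```python
from typing import Callable, Dict, List

# Hypothetical paraphrases of the four prompting strategies;
# these are NOT the paper's exact prompts.
STRATEGIES: Dict[str, str] = {
    "efficiency": "Improve the performance of this code.",
    "features": "Add useful features to this code.",
    "security": "Improve the security of this code.",
    "ambiguous": "Improve this code.",
}

# generate(instruction, code) -> revised code; a hypothetical LLM wrapper.
Generator = Callable[[str, str], str]


def iterate_code(seed_code: str, instruction: str,
                 generate: Generator, rounds: int = 10) -> List[str]:
    """Apply the same instruction repeatedly, feeding each output back in.

    Returns every intermediate version so each one can be scanned.
    """
    versions: List[str] = []
    code = seed_code
    for _ in range(rounds):
        code = generate(instruction, code)  # output becomes the next input
        versions.append(code)
    return versions


def run_experiment(seed_code: str, generate: Generator,
                   rounds: int = 10) -> Dict[str, List[str]]:
    """Run the iterative loop once per prompting strategy."""
    return {
        name: iterate_code(seed_code, prompt, generate, rounds)
        for name, prompt in STRATEGIES.items()
    }
```

Each returned version would then go through the SAST, manual review, and severity classification described above; counting findings per round is what surfaces the trend over iterations.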

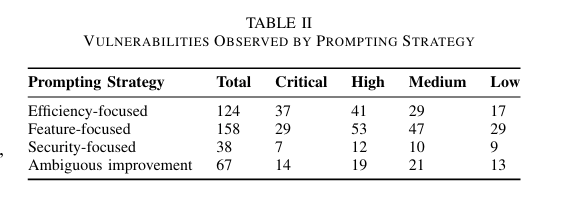

Here’s where things take an interesting turn. Across 40 rounds of iterations (10 for each of the 4 prompting strategies), they observed 387 distinct security vulnerabilities, with vulnerability counts increasing in later iterations. This held even for the security-focused prompting strategy, albeit less severely than for the other strategies.

Digging a bit deeper, they saw few vulnerabilities in the initial iterations, more in the middle iterations, and the highest counts in the later iterations. This suggests that the more a developer uses AI to iterate on code, the less secure it becomes, even when specifically asking for secure code.

The researchers go on to break down the types of vulnerabilities they identified across each prompting category, as well as the nuances of the findings, which you can explore further by reading the research.

They also offer explanations for why they suspect the iterations introduced more vulnerabilities, even with security-focused prompting. They call out three patterns. First, cryptographic library misuse: the LLMs replaced standard library calls with custom implementations or incorrect library usage. Second, over-engineering: the LLMs added unnecessary complexity, such as multiple layers of encryption or validation, which introduced flaws where those layers were integrated. Third, the LLMs frequently implemented outdated or insecure security patterns, such as deprecated ciphers.
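To illustrate the first and third patterns, here is a minimal Python sketch contrasting an outdated, unsalted digest with salted key derivation from the standard library. These functions are hypothetical examples, not code from the study, and the iteration count is an assumption based on OWASP’s published guidance for PBKDF2-HMAC-SHA256.

```python
import hashlib
import hmac
import secrets
from typing import Tuple

# Anti-pattern (illustrative): a deprecated, unsalted digest for passwords.
# Identical passwords always produce identical hashes.
def hash_password_insecure(password: str) -> str:
    return hashlib.md5(password.encode()).hexdigest()


# Assumption: iteration count taken from OWASP guidance for PBKDF2-HMAC-SHA256.
ITERATIONS = 600_000


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Derive a salted, iterated hash using only standard-library primitives."""
    salt = secrets.token_bytes(16)  # fresh random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the digest and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)
```

The point is less the specific algorithm than the shape of the regression: a later “improvement” that swaps `pbkdf2_hmac` for a hand-rolled or deprecated primitive can still look reasonable in a diff, which is why each iteration needs independent checks.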

This research highlights the limitations of LLMs in secure development. It underscores the need for additional security controls and guardrails that enable secure AI-driven development without imposing excessive burden on developers and their workflows; otherwise, developers will be tempted to sidestep security altogether.

What can you do about it?

As discussed above, the findings are concerning: they imply that AI-generated code carries security risks, even with security-centric prompting. None of this means you shouldn’t tap into the productive power of AI coding platforms and tools, or that they aren’t helpful. However, they should be used with safeguards and guardrails in place to mitigate organizational risk while still reaping the benefits.

- Spec-Driven Development: This study shows you can’t prompt your way out of these problems. But you can establish clear tests and specifications up front and then verify that the code passes them. You can even use a different LLM to write the tests and play the two against each other.

- Use security-conscious prompts: Prompting alone didn’t solve the problem, but code generated with security-focused prompts had significantly fewer vulnerabilities.

- MCP servers: Give AI coding agents tools that force them to check their work by validating code with SAST, software composition analysis (SCA), and secrets scans.

- Code review: Review code for design and security posture drift, and consider AI tools to reduce some of the burden here.
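As a sketch of the spec-driven idea, the security requirements below are written as executable tests before any AI revision, and every subsequent iteration must keep passing them. The `safe_join` function and the `SafeJoinSpec` tests are hypothetical examples written by hand for illustration; in practice the spec could come from a different LLM, as suggested above.

```python
import os
import unittest


def safe_join(base_dir: str, user_path: str) -> str:
    """Hypothetical function under test: resolve user_path inside base_dir."""
    base = os.path.realpath(base_dir)
    target = os.path.realpath(os.path.join(base, user_path))
    # Reject anything that resolves outside the base directory.
    if os.path.commonpath([base, target]) != base:
        raise ValueError("path escapes base directory")
    return target


class SafeJoinSpec(unittest.TestCase):
    """Security requirements written independently of the implementation."""

    BASE = "/srv/uploads"

    def test_allows_nested_file(self):
        result = safe_join(self.BASE, "reports/q3.pdf")
        self.assertTrue(result.endswith(os.path.join("reports", "q3.pdf")))

    def test_rejects_parent_traversal(self):
        with self.assertRaises(ValueError):
            safe_join(self.BASE, "../../etc/passwd")

    def test_rejects_absolute_path(self):
        with self.assertRaises(ValueError):
            safe_join(self.BASE, "/etc/passwd")
```

Saved as a module, the spec runs with `python -m unittest <module>`. If an AI “improvement” drops the `commonpath` check, the traversal tests fail regardless of how the prompt was worded.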

Resources

The team at Endor Labs has put together a mix of resources and products to help you implement security guardrails and best practices in your organization while still capturing the benefits of AI coding tools.

- Prompt library: Compiles 40+ security-conscious prompts for coding. It’s based on an academic research paper that found that the recursive criticism and improvement (RCI) format was the most effective method. It forces the LLM to review its code and consider security implications.

- MCP server: Offers a full MCP server with built-in rules and security tools that force AI coding agents to check their work by validating code with SAST, SCA, and secrets scans.

- Code review: AI Security Code Review uses multiple agents to review pull requests (PRs) for design flaws (for example, cryptographic library changes) that impact your security posture.
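The guardrails above boil down to checking each AI revision before accepting it. As a rough illustration only (this is not how any of the products above work, and the rule names and patterns are hypothetical), here is a toy Python check that flags weak hashes, deprecated ciphers, ECB mode, and hardcoded AWS-style access keys, then reports only the findings a new revision introduced. Real SAST and secrets scanners go far beyond regular expressions.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Pattern

# Hypothetical, deliberately small rule set for illustration.
RULES: Dict[str, Pattern[str]] = {
    "weak-hash": re.compile(r"\bhashlib\.(md5|sha1)\s*\("),
    "deprecated-cipher": re.compile(r"\b(DES|ARC4|Blowfish)\b"),
    "ecb-mode": re.compile(r"\bMODE_ECB\b"),
    "hardcoded-aws-key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


@dataclass
class Finding:
    rule: str
    line: int
    text: str


def scan(source: str) -> List[Finding]:
    """Return one finding per (line, matching rule)."""
    findings: List[Finding] = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for rule, pattern in RULES.items():
            if pattern.search(line):
                findings.append(Finding(rule, lineno, line.strip()))
    return findings


def introduced(before: str, after: str) -> List[Finding]:
    """Findings present in the new revision but not in the previous one."""
    seen = {(f.rule, f.text) for f in scan(before)}
    return [f for f in scan(after) if (f.rule, f.text) not in seen]
```

Comparing revisions rather than scanning in isolation mirrors the drift problem the study describes: a gate that fails whenever `introduced()` returns anything catches an iteration that quietly swaps in MD5 or a hardcoded key.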

Conclusion

As discussed above, steps such as spec-driven development, security-centric prompting, coding agents that review outputs, and native integration with MCP servers and their capabilities can enable secure AI coding adoption. AI and LLMs have fundamentally changed the nature of modern software development, and it’s imperative for the security community to modernize its approach to secure coding and become a trusted business enabler, leveraging the same technologies as our peers in development without introducing unnecessary toil and delays.

