Over the last few months, I’ve been building personal projects at home with LLMs and giving demos of the Endor Labs MCP Server. One thing I kept running into, whether I was using VS Code, Cursor, Windsurf, or another AI coding editor, was that the models in these tools fairly consistently imported outdated open source dependencies.

Sometimes those dependencies had known vulnerabilities. Sometimes they didn’t. But in either case, the version being used was frequently months out of date. At first, I assumed this was just a quirk of the specific examples I was running. But the pattern kept showing up.

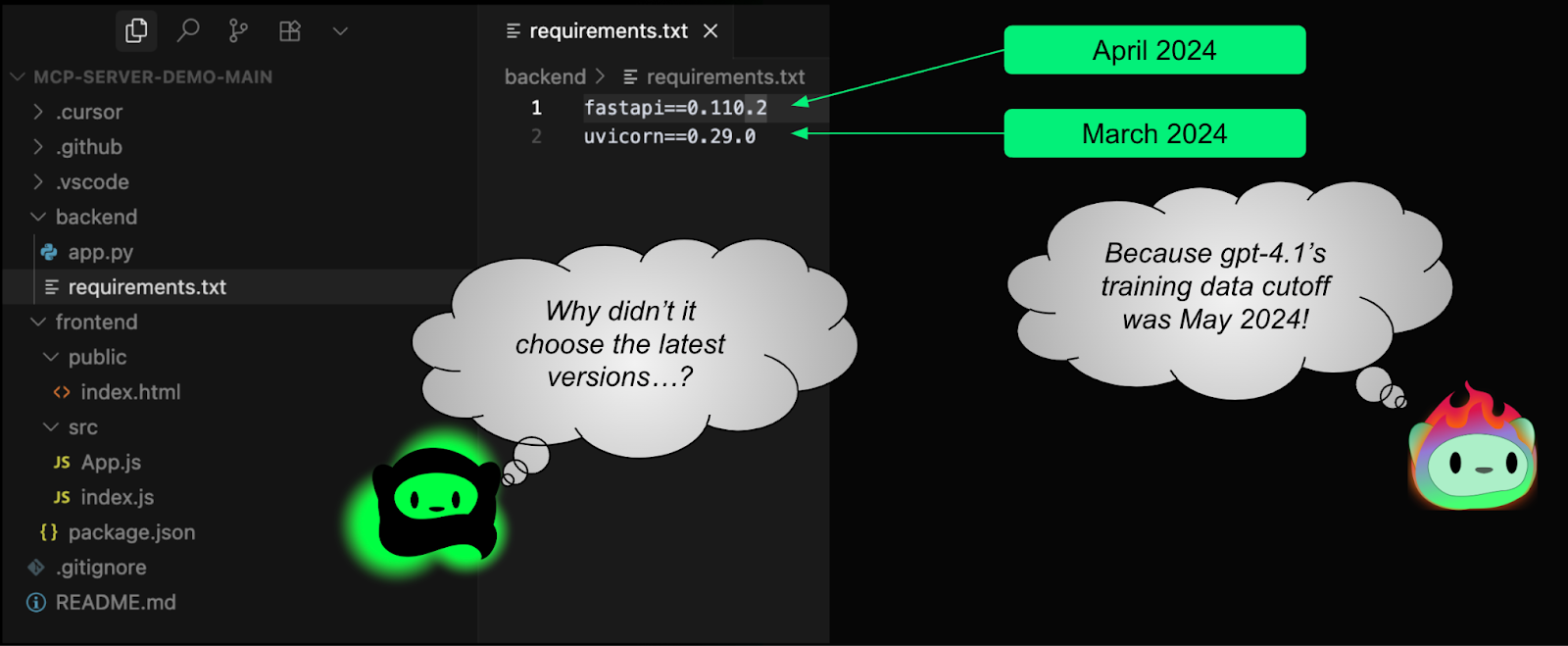

Eventually, I realized what was going on: these models were working off old training data. As a result, they were pulling outdated versions of dependencies, including versions with known CVEs.

This is a much less sexy problem than slopsquatting and package hallucinations, but also a much more prevalent issue for developers and security teams alike.

LLMs are trained on a snapshot of the past

Large language models like GPT-4o and Claude Sonnet 4 aren’t connected to the internet unless they are given tools. By default, they don’t fetch the latest version of a library, check for CVEs, or read the most recent release notes. They rely on their training data, which can be months out of date.

This is a foundational limitation. Even with access to the internet, these tools won’t necessarily look for the latest version of a dependency. They’ll default to whatever is in their training data.

As a result, if a dependency had a critical vulnerability disclosed yesterday, an LLM won’t know about it unless it’s augmented with real-time security context. And unless the tool you’re using explicitly compensates for that gap, the code it generates might be using outdated or insecure packages.
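One way to supply that real-time security context is to query a public vulnerability database when a dependency is added. The sketch below assumes the OSV.dev query API (`POST https://api.osv.dev/v1/query`) and a runtime with a global `fetch` (Node 18 or later); `buildOsvQuery` and `findKnownVulns` are hypothetical helper names, and this is not how any particular editor or MCP server implements the check.

```typescript
// Shape of the OSV.dev /v1/query request body for one package version.
interface OsvQuery {
  version: string;
  package: { name: string; ecosystem: string };
}

// Subset of the OSV response used here; "vulns" is absent when nothing matches.
interface OsvResponse {
  vulns?: { id: string; summary?: string; aliases?: string[] }[];
}

// Hypothetical helper: build the query for an npm package at an exact version.
function buildOsvQuery(name: string, version: string): OsvQuery {
  return { version, package: { name, ecosystem: "npm" } };
}

// Hypothetical helper: ask OSV.dev which advisories affect name@version.
// Requires network access and a global fetch (e.g. Node 18+).
async function findKnownVulns(name: string, version: string): Promise<string[]> {
  const res = await fetch("https://api.osv.dev/v1/query", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildOsvQuery(name, version)),
  });
  if (!res.ok) {
    throw new Error(`OSV query failed with HTTP ${res.status}`);
  }
  const data = (await res.json()) as OsvResponse;
  // Primary advisory IDs; CVE numbers, when present, live in each entry's aliases.
  return (data.vulns ?? []).map((v) => v.id);
}
```

For example, `await findKnownVulns("axios", "1.7.2")` returns whatever advisory IDs OSV.dev currently lists for that exact version. The `id` field is the advisory’s primary identifier (for npm packages this is often a GHSA ID), so look in `aliases` if you need the CVE number.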

Case Study: Axios and CVE-2024-39338

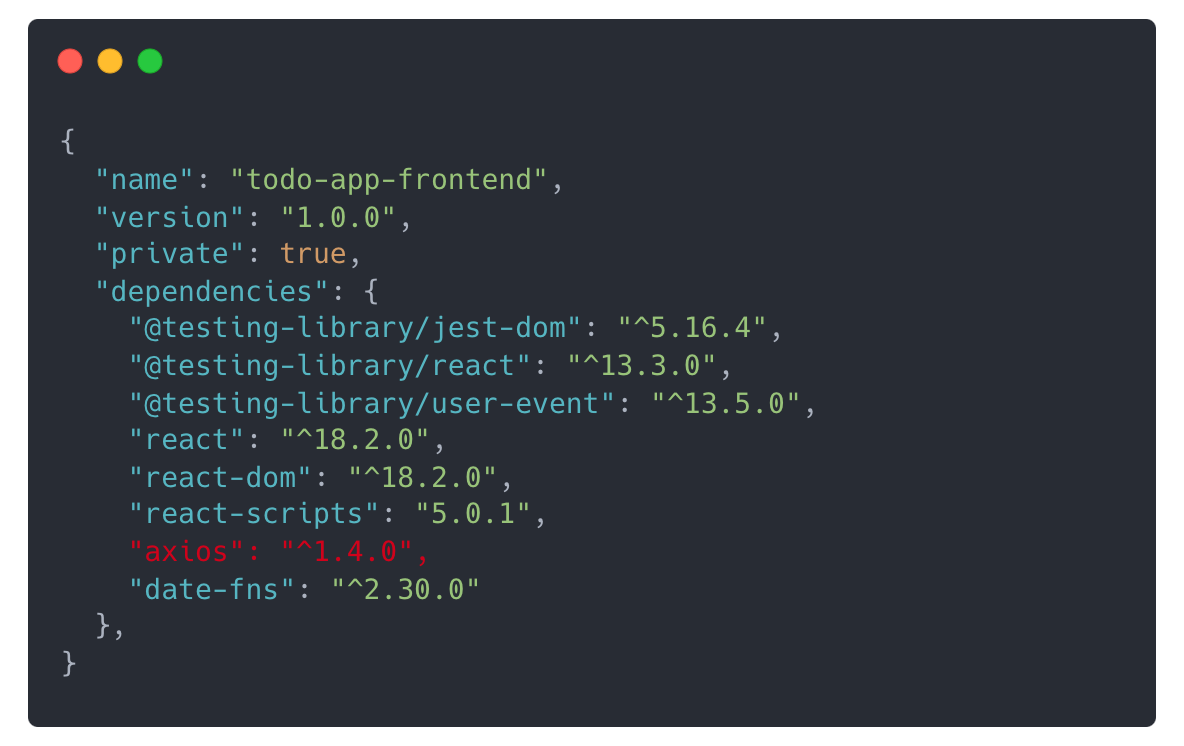

One dependency that kept showing up in my experiments was Axios, the popular HTTP client for the browser and Node.js. It’s a solid library: actively maintained and widely used. But it also had a CVE, a server-side request forgery (SSRF) flaw, published in August 2024.

I want to be clear: this isn’t a knock on the Axios project or its maintainers. They patched the issue. The problem is that the CVE was published after the training cutoff of the model in my code editor, so the model didn’t know about it and kept recommending an outdated, vulnerable version.

In my case, it was often (but not always) imported as part of a demo that used the following prompt:

“Create a TODO list app with a React frontend and Python backend. The app must support creating Todo items with an expiration date and have the ability to delete items from the Todo list.”

When building the frontend, the LLM would add an outdated version of Axios to my package.json:

And to be fair, this isn’t just a problem with JavaScript or React. I saw similar behavior with dependencies in Python and other languages.
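To check whether a given Axios version falls inside the CVE-2024-39338 window, you can compare it against the advisory’s affected range. This sketch assumes the range listed in the GitHub advisory (1.3.2 up to, but not including, the 1.7.4 fix); confirm those bounds against the advisory before relying on them. It only handles plain `MAJOR.MINOR.PATCH` strings, not prereleases or range syntax, and `isAffectedByCve202439338` is a hypothetical name.

```typescript
// Parse a plain "MAJOR.MINOR.PATCH" version (optionally prefixed with "v").
function parseVersion(v: string): [number, number, number] {
  const m = /^v?(\d+)\.(\d+)\.(\d+)$/.exec(v.trim());
  if (!m) throw new Error(`Unsupported version string: ${v}`);
  return [Number(m[1]), Number(m[2]), Number(m[3])];
}

// Standard comparator: negative if a < b, zero if equal, positive if a > b.
function compareVersions(a: string, b: string): number {
  const pa = parseVersion(a);
  const pb = parseVersion(b);
  for (let i = 0; i < 3; i++) {
    if (pa[i] !== pb[i]) return pa[i] - pb[i];
  }
  return 0;
}

// Assumed affected range for CVE-2024-39338: >= 1.3.2 and < 1.7.4.
// Verify these bounds against the published advisory.
const AFFECTED_FROM = "1.3.2";
const FIXED_IN = "1.7.4";

function isAffectedByCve202439338(version: string): boolean {
  return (
    compareVersions(version, AFFECTED_FROM) >= 0 &&
    compareVersions(version, FIXED_IN) < 0
  );
}

console.log(isAffectedByCve202439338("1.7.2")); // true
console.log(isAffectedByCve202439338("1.7.4")); // false
```

Run it against the version that actually got installed (for example, the one recorded in `package-lock.json`), not the range in `package.json`: a spec like `^1.7.2` can resolve to a patched 1.x release on a fresh install, while an existing lockfile can keep the vulnerable one pinned.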

Monitor the training cutoff dates of popular LLMs

Unless these tools are augmented with real-time search or security context, the code they generate is only as current as their training cutoff. This means security and engineering teams will have to monitor the training cutoff dates of the popular LLMs their developers use.
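One concrete way to track that gap is to list the releases of a dependency that were published after a model’s training cutoff, since the model cannot have seen them. This sketch assumes the npm registry’s package document, whose `time` field maps each version to its publish timestamp alongside `created` and `modified` entries. The cutoff date and timestamps in the example are made-up placeholders, not real model or Axios data, and the helper names are hypothetical.

```typescript
// version -> ISO 8601 publish timestamp, plus "created" and "modified" keys,
// as found in the "time" field of an npm registry package document.
type PublishTimes = Record<string, string>;

// Versions published strictly after the cutoff, ordered oldest first.
function versionsAfterCutoff(time: PublishTimes, cutoff: Date): string[] {
  return Object.entries(time)
    .filter(([key]) => key !== "created" && key !== "modified")
    .filter(([, published]) => new Date(published).getTime() > cutoff.getTime())
    .sort(([, a], [, b]) => new Date(a).getTime() - new Date(b).getTime())
    .map(([version]) => version);
}

// Fetch the "time" map for a package (needs network access and a global fetch).
// Scoped names are sent as "@scope%2Fname".
async function fetchPublishTimes(pkg: string): Promise<PublishTimes> {
  const res = await fetch(`https://registry.npmjs.org/${pkg.replace("/", "%2F")}`);
  if (!res.ok) throw new Error(`Registry returned HTTP ${res.status} for ${pkg}`);
  const doc = (await res.json()) as { time?: PublishTimes };
  return doc.time ?? {};
}

// Example with made-up data: a hypothetical package and a hypothetical cutoff.
const sampleTimes: PublishTimes = {
  created: "2023-01-01T00:00:00.000Z",
  modified: "2024-10-01T00:00:00.000Z",
  "1.0.0": "2024-01-10T00:00:00.000Z",
  "1.1.0": "2024-09-01T00:00:00.000Z",
};
console.log(versionsAfterCutoff(sampleTimes, new Date("2024-06-01T00:00:00Z"))); // [ '1.1.0' ]
```

Anything this returns for a package you depend on is a release the model could only learn about through a tool call, for example `versionsAfterCutoff(await fetchPublishTimes("axios"), cutoff)` with the cutoff of the model your team uses.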

The chart below shows the training cutoff dates of some of the popular LLMs used in AI coding assistants:

How to keep dependencies safe and up to date

Telling engineering teams to stop using LLMs isn’t a winning approach. But developers will need to increase their security awareness, and security teams will need to provide scalable guardrails.

Here are three strategies I’ve found helpful, from most granular to most scalable:

1. Prompt the model to look for the latest version

If you know exactly what library you want to use, a simple addition to your prompt can go a long way. Instead of instructing the agent:

“Use Axios to make a GET request”

Give it some guidance:

“Use the latest secure version of Axios (as of July 2025) to make a GET request”

This nudges the agent to verify the latest version using its tools instead of relying on its training data.
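Behind the scenes, checking the latest version usually comes down to asking the package registry. As a rough sketch of what such a tool call could do (not what any specific agent runs), the npm registry serves the manifest for a dist-tag at `https://registry.npmjs.org/<package>/latest`; `npm view axios version` gives the same answer from the command line. `latestVersion`, `baseVersion`, and `isBehindLatest` are hypothetical names.

```typescript
// Minimal shape of the manifest the registry returns for a version or dist-tag.
interface VersionManifest {
  name: string;
  version: string;
}

// Ask the npm registry which version the "latest" dist-tag points to.
// Assumes an unscoped package name, network access, and a global fetch.
async function latestVersion(pkg: string): Promise<string> {
  const res = await fetch(`https://registry.npmjs.org/${pkg}/latest`);
  if (!res.ok) throw new Error(`Registry returned HTTP ${res.status} for ${pkg}`);
  const manifest = (await res.json()) as VersionManifest;
  return manifest.version;
}

// Strip a leading ^ or ~ from a package.json spec such as "^1.6.8".
// Other range syntax (>=, ||, x-ranges) is out of scope for this sketch.
function baseVersion(spec: string): string {
  return spec.trim().replace(/^[\^~]/, "");
}

// True when the spec's base version is older than the latest release.
// Compares plain MAJOR.MINOR.PATCH numbers only.
function isBehindLatest(spec: string, latest: string): boolean {
  const a = baseVersion(spec).split(".").map(Number);
  const b = latest.split(".").map(Number);
  for (let i = 0; i < 3; i++) {
    const x = a[i] ?? 0;
    const y = b[i] ?? 0;
    if (x !== y) return x < y;
  }
  return false;
}
```

For example, `isBehindLatest("^1.6.8", await latestVersion("axios"))` flags a stale spec. Keep in mind that “latest” is simply the newest release the maintainers tagged, so it is worth pairing with a vulnerability lookup rather than treating it as proof of safety.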

2. Use rules to enforce dependency checks

Rather than prompting every time, you can add a rule file to your IDE configuration. For example, in tools like Cursor and VS Code, you can add a rule that might look like this:

“Whenever you add or modify a dependency in package.json, always check for the latest version before adding it.”

Instead of the developer having to remember to prompt the agent every time, the agent should perform this check automatically.
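If you want a rule to point the agent at a concrete, repeatable check instead of a web search, one option is a small script it can run after touching `package.json`. The sketch below wraps `npm outdated --json`, which reports `current`, `wanted`, and `latest` for each outdated dependency; the helper names and the idea of referencing the script from a rule are assumptions, not a built-in Cursor or VS Code feature.

```typescript
import { spawnSync } from "node:child_process";

// One entry of `npm outdated --json` output, keyed by package name.
interface OutdatedEntry {
  current?: string; // absent when the package isn't installed yet
  wanted: string; // highest version allowed by the package.json range
  latest: string; // version tagged "latest" on the registry
}

// Turn the JSON report into one human-readable line per dependency.
function summarizeOutdated(report: Record<string, OutdatedEntry>): string[] {
  return Object.entries(report).map(
    ([name, e]) =>
      `${name}: installed ${e.current ?? "none"}, range allows ${e.wanted}, latest is ${e.latest}`
  );
}

// Run npm in a project directory. npm outdated can exit non-zero when it finds
// outdated packages, so spawnSync (which doesn't throw on exit codes) is used.
function runNpmOutdated(cwd: string = process.cwd()): Record<string, OutdatedEntry> {
  const result = spawnSync("npm", ["outdated", "--json"], {
    cwd,
    encoding: "utf8",
    shell: process.platform === "win32", // npm is a .cmd shim on Windows
  });
  const out = result.stdout?.trim();
  return out ? (JSON.parse(out) as Record<string, OutdatedEntry>) : {};
}
```

For example, `console.log(summarizeOutdated(runNpmOutdated()).join("\n"))` prints one line per outdated dependency in the current project, which gives the agent something specific to act on.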

3. Use an MCP server for security context

Prompts and rules help, but they often lead to uneven results because they rely on individual developers to apply them, or on decentralized tools like search engines. That’s where security tools like the Endor Labs MCP Server come in. They can provide up-to-date security context to the LLMs in tools like Cursor, VS Code, and Windsurf while the code is being generated.

That means even if the model suggests an outdated or insecure package, the MCP Server will guide the model to check its work and provide it with the latest secure version. It will also detect if your code is already using a vulnerable version, and help the agent upgrade it to a safe version that won’t break your code.
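Picking “a safe version that won’t break your code” can be approximated with semantic versioning: among published releases, choose the lowest one that is at or above the current version, stays on the same major version, and sits outside every known vulnerable range. This is a simplified illustration of that idea, not how the Endor Labs MCP Server makes the decision; `pickSafeUpgrade` is a hypothetical helper, and the release list and affected range in the example are assumptions.

```typescript
type Semver = [number, number, number];

// Affected range as [introduced, fixed): introduced inclusive, fixed exclusive.
interface AffectedRange {
  introduced: string;
  fixed: string;
}

// Parse a plain "MAJOR.MINOR.PATCH" string; prereleases are out of scope here.
function parseSemver(v: string): Semver {
  const m = /^(\d+)\.(\d+)\.(\d+)$/.exec(v.trim());
  if (!m) throw new Error(`Unsupported version string: ${v}`);
  return [Number(m[1]), Number(m[2]), Number(m[3])];
}

// Standard comparator: negative if a < b, zero if equal, positive if a > b.
function compareSemver(a: string, b: string): number {
  const pa = parseSemver(a);
  const pb = parseSemver(b);
  for (let i = 0; i < 3; i++) {
    if (pa[i] !== pb[i]) return pa[i] - pb[i];
  }
  return 0;
}

function isAffected(v: string, ranges: AffectedRange[]): boolean {
  return ranges.some(
    (r) => compareSemver(v, r.introduced) >= 0 && compareSemver(v, r.fixed) < 0
  );
}

// Lowest release that is >= current, on the same major version, and outside
// every affected range. If current is already safe and listed, it is returned
// unchanged; null means no same-major release qualifies.
function pickSafeUpgrade(
  current: string,
  published: string[],
  ranges: AffectedRange[]
): string | null {
  const major = parseSemver(current)[0];
  const candidates = published
    .filter((v) => parseSemver(v)[0] === major)
    .filter((v) => compareSemver(v, current) >= 0)
    .filter((v) => !isAffected(v, ranges))
    .sort(compareSemver);
  return candidates[0] ?? null;
}

// Example with assumed data: a hypothetical release list and affected range.
const assumedRanges: AffectedRange[] = [{ introduced: "1.3.2", fixed: "1.7.4" }];
const hypotheticalReleases = ["1.6.8", "1.7.2", "1.7.3", "1.7.4", "1.8.0", "2.0.0"];
console.log(pickSafeUpgrade("1.7.2", hypotheticalReleases, assumedRanges)); // 1.7.4
```

Staying on the current major version is only a heuristic: semver signals compatibility, but a minor or patch release can still change behavior your code relies on, so the upgrade still needs to be tested.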

Conclusion

LLMs are amazing tools for accelerating software development workflows, but they require even greater security awareness than in the past. Treat their suggestions like untrusted code coming into your environment: verify and test them rather than accepting them blindly.

Contact us to speak with our team about how you can help software engineering teams safely adopt and roll out AI code editors in your organization.
